Real-time energy tracking with Python / PHP, GAE, MySQL and Google Cloud Platform: A practical guide

This article explains how to build a web application using Python and PHP on Google App Engine with MySQL on Google Cloud Platform. It covers architecture, deployment flow, and why this hybrid stack works well for scalable cloud apps.

I have been working on and maintaining a product for Green Energy Management for over seven years. The energy industry is still in its nascent stages in India, which means frequent updates to the business logic are necessary to keep up with evolving requirements.

The system is built using the standard MVC (Model-View-Controller) architecture, designed to accommodate enhancements and updates easily. It manages over 100+ power producers and consumers.

Power producers are entities that own Wind Energy Generators (WEGs)—the massive wind turbines that generate electricity and Solar Power Generators (SPGs). This electricity is fed into a local power station, and a reverse meter (opposite to the standard home electricity meter) tracks the units generated. At the end of each month, the Government Electricity Board generates a detailed bill based on the power generation data.

Unlike typical home electricity bills, these bills are highly detailed. The 24-hour day is divided into five time slots, each with a different rate for electricity generation.

On the other end, we have consumers located in different areas, consuming power from different grids. The power generation and consumption can be offset against each other.

Recently, the Government proposed a change: instead of tracking power generation in five time slots per day, it must now be tracked in 15-minute intervals. This requires a real-time, continuous monitoring and tracking system.

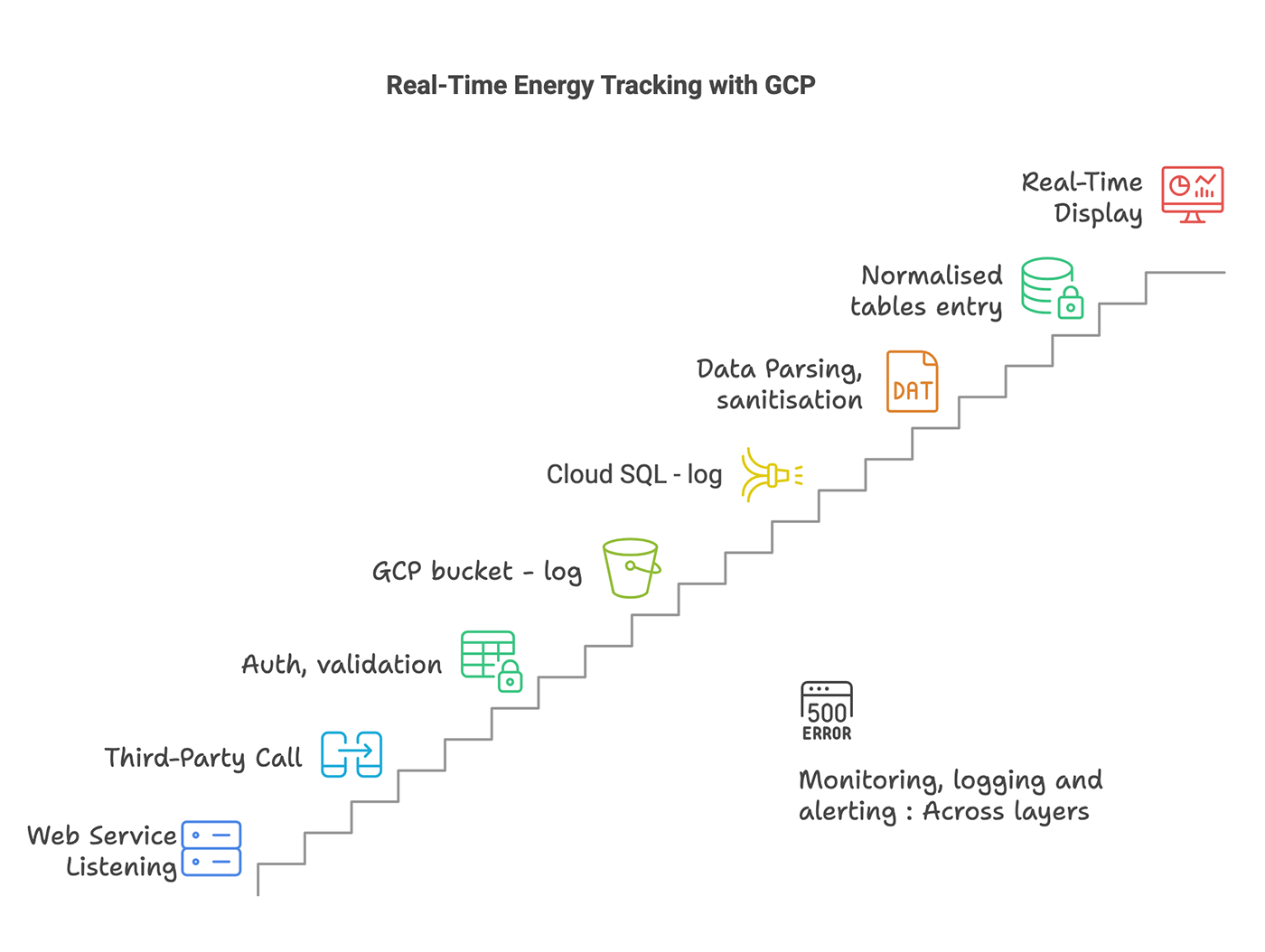

Project Plan for building with Python, PHP, GAE, and MySQL on GCP

- Hardware Upgrade: As a first step, we replaced the existing meters with advanced meters equipped with software capabilities.

- Data Collection: Every minute, the new meters post generation data and other relevant metrics to a web service endpoint.

- Web Service: We exposed a web service endpoint to receive the POSTed data and write it to a persistent storage system.

- Data Processing: The collected data is converted into a usable format and stored appropriately.

- Application Customization: Then customize our custom ERP application to work on a 15 minute slot data.

The immediate goal is to eliminate manual user input for generation data and replace it with an automated, real-time data capture system from the WEGs. This system must be highly available, reliable, resilient, secure, and robust—every aspect is critical.

After extensive deliberation, we chose Google Cloud Platform (GCP) as our infrastructure provider.

Technology Stack: Python, PHP, GAE, Cloud Storage, and MySQL

- Web Framework: Python / PHP.

- Google App Engine (GAE): For hosting the web service (Python) and application logic (PHP).

- Cloud Storage Buckets: For persistent storage of raw, un-processed data. Firstline backup.

- Managed MySQL (Cloud SQL): For secondline redundant backup. Then for normalized data storage and retrieval.

- Logging: A centralized adapter on GCP logger.

- Monitoring: GCP cloud monitoring with custom alerts.

- Google Pub/Sub: For decoupling data ingestion and processing. Postponed for first enhancement.

We decided against using Compute Engine at this stage to avoid the overhead of infrastructure management. Our focus is on getting the application up and running efficiently while leveraging GCP's managed services.

Implementation Flow with Python, Cloud Storage, and Cloud SQL

The application starts with a web service that listens for POST requests.

Web Service

- HTTPS: Secure communication is enforced using HTTPS.

- POST Requests: The service accepts only POST requests, and it validates that the incoming request is of the POST type.

- Authentication: Requests are authenticated using an API key. These keys are securely stored in Secret Manager.

- Integrity Checks: SHA-based integrity checks are performed to ensure the content has not been tampered with during transit or suffered any data loss.

- Data Validation and Sanitization: All incoming data is validated and sanitized to prevent injection attacks.

- Logging: Requests are logged to Google Cloud Platform (GCP) using structured logging for better traceability and analysis.

Data backup

The data is first written to a GCP Storage bucket in its raw, unaltered form. A unique request ID is generated for each request, and the data is stored along with this request ID and a timestamp for traceability purposes.

As a redundant secondary storage measure, the same data is also written to a Cloud SQL database table (MySQL). The database entry includes the same request ID for consistency and mapping.

These two storage mechanisms are not used transactionally within the application. Instead, they serve as one-time logs and are intended for use only in the event of a failure or for auditing purposes.

Transactional data

The received data is parsed, validated, sanitized and then stored in normalized Cloud SQL database tables. These are for transactional pursposes. The reporting ERP application will use these data.

Input data validation

- Data type checks: Ensured the data matched the expected type (e.g., string, integer, boolean).

- Format validation: Used regular expressions to validate formats (e.g., meter IDs).

- Length limits: Verified that the content did not exceed allowed lengths.

- Required fields checks: Confirmed that all mandatory fields were present and not empty.

- Range validation: For numerical data, ensured values fell within an acceptable range (e.g., consumption units).

- Allowlists: Only accepted known good values (e.g., voltage).

On validation failure, the request is logged to the error channel with all relevant details, including the reason for failure. The process terminates at this point, as no further action is required.

Since the format of the call is designed to handle one record per request, there is no need to proceed if validation fails.

In data sanitization, just a couple of steps. Remove leading and trailing whitespaces from all input data. Identify and handle special characters where they are not expected (e.g., in numeric fields or specific formats).

Error logging

A centralized error logger acts as an adapter over the GCP logger. A unique request ID is generated for every request and is prefixed to all error logs for traceability.

Monitoring

Alerts for failed requests are configured using GCP's monitoring tools (Cloud Monitoring). Failures can occur due to various reasons, including:

- Authentication failure.

- Improper request method (e.g., non-POST requests).

- Malformed request payload.

- Data validation failure.

- Errors during sanitization.

- Abuse detection due to suspicious request rates.

- Storage bucket or database write failures.

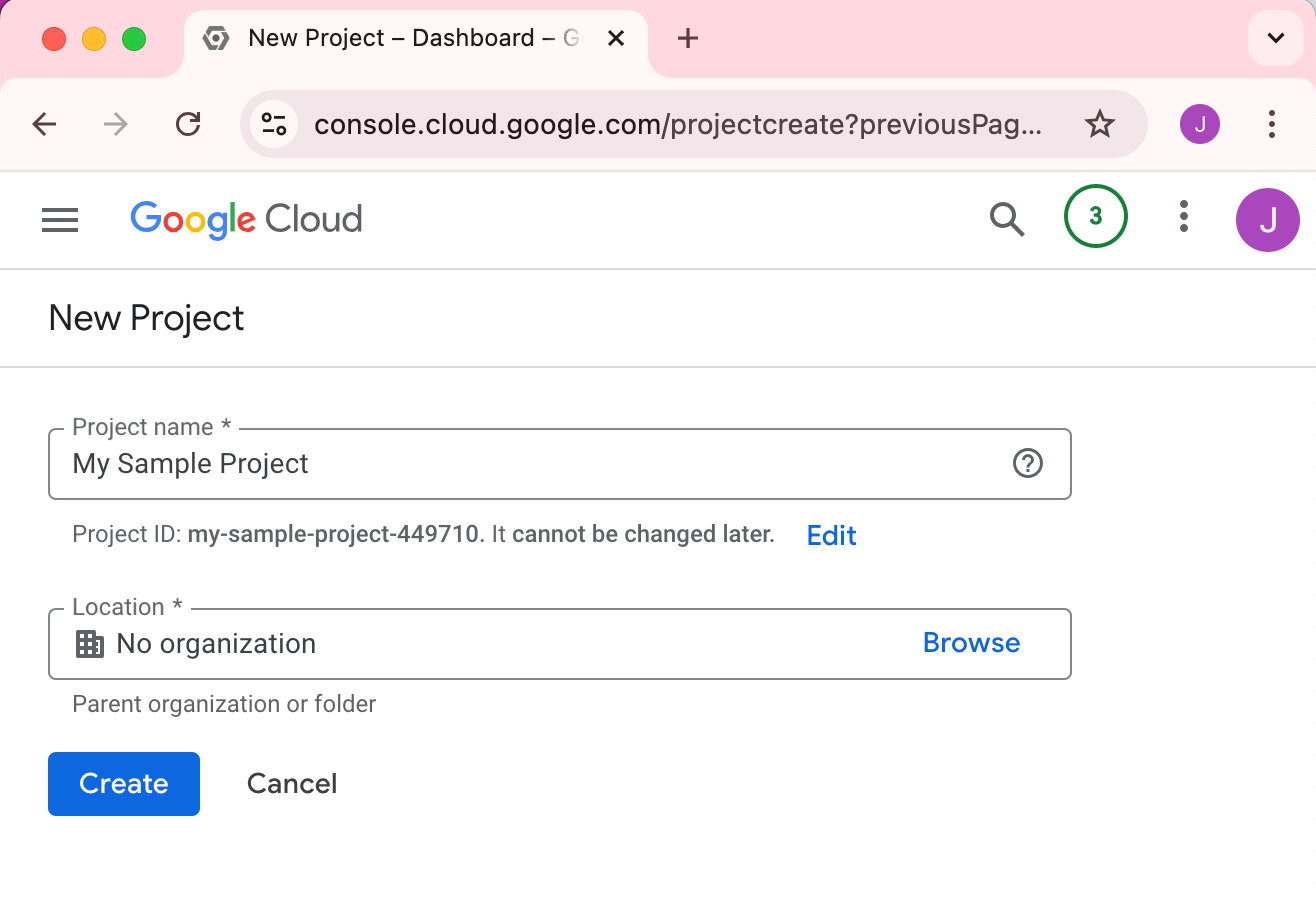

Create a Google Cloud Platform Project for App Engine, Cloud Storage, and Cloud SQL

Before we start, we need to create a new project in GCP. This project will host all our resources, including App Engine, Cloud Storage, Cloud SQL, and other services. You need a Google account to create a project.

You will also need a credit card to create a billing account. Google provides a free credit of around $300 USD, which may vary depending on your location. This free credit will expire in three months, after which your credit card will be charged.

This free credit will be more than sufficient for the majority of projects unless you are doing AI projects and training your model.

Here's how you can create a new project in GCP:

Log in to the Google Cloud panel. Refer to the image below; it should be self-explanatory.

Click on the "Select a project" dropdown at the top of the page. You will see a "New Project" button. Click on it to create a new project.

Enter the project name and click on the "Create" button. You can also change the organization if you have multiple organizations.

Once the project is created, you will be redirected to the project dashboard. You can see the project ID, project number, and other details here.

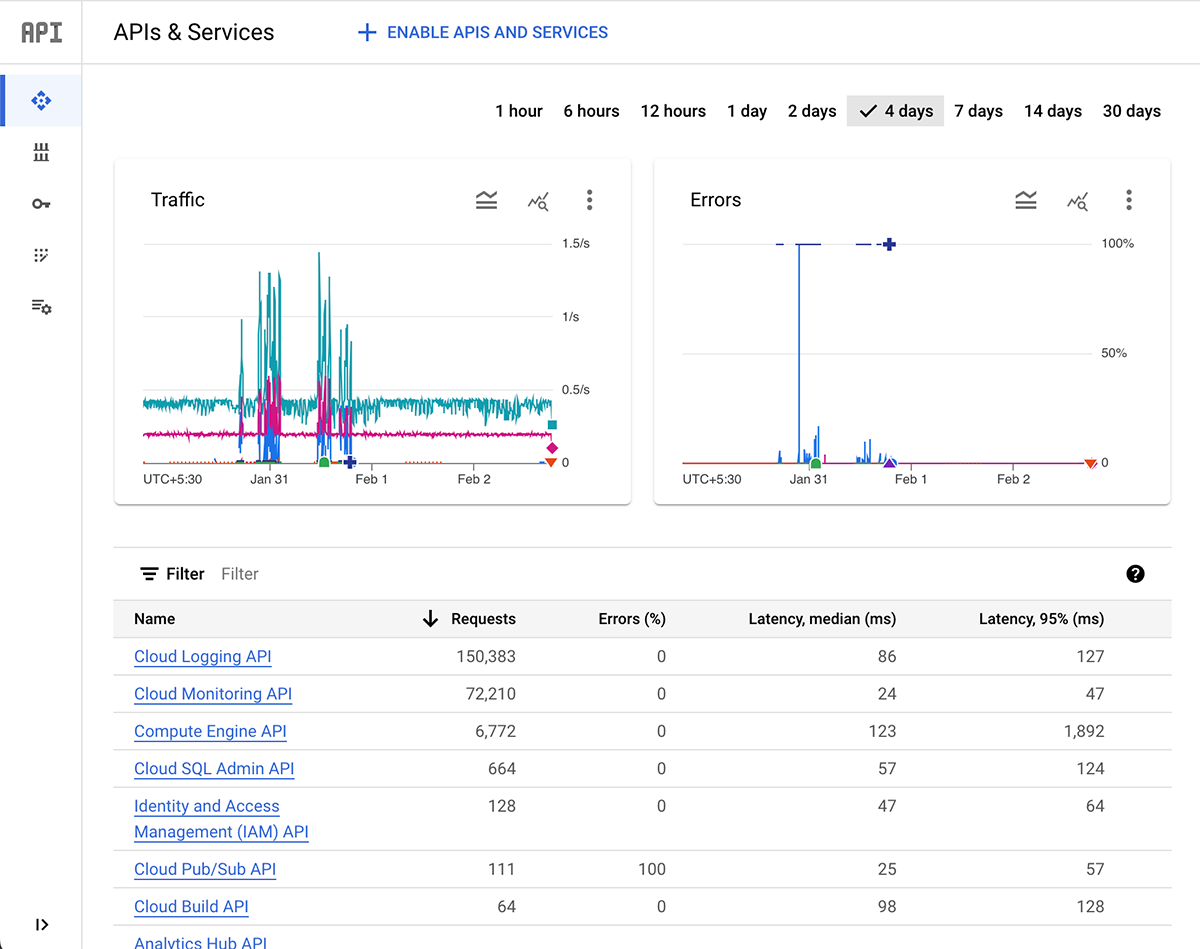

Enable App Engine, Cloud Storage, Cloud SQL, and Secret Manager APIs

Before we start creating resources, we need to enable the required APIs. We need to enable the following APIs:

- App Engine API

- Cloud Storage API

- Cloud SQL API

- Secret Manager API

- Cloud Logging API

- Cloud Monitoring API

Here's how you can enable GCP APIs:

Click on the "Navigation menu" on the top left corner of the page. You will see the "APIs & Services" menu. Click on it.

Click on the "Dashboard" link. You will see a "Enable APIs and Services" button. Click on it.

Search for the required APIs and enable them. You can search for the APIs by name or use the search bar to find the required APIs.

The traffic spikes you see are from periods of intense work on the application, during which I repeatedly redeployed and tested it.

Once you enable the APIs, you can start creating resources in GCP.

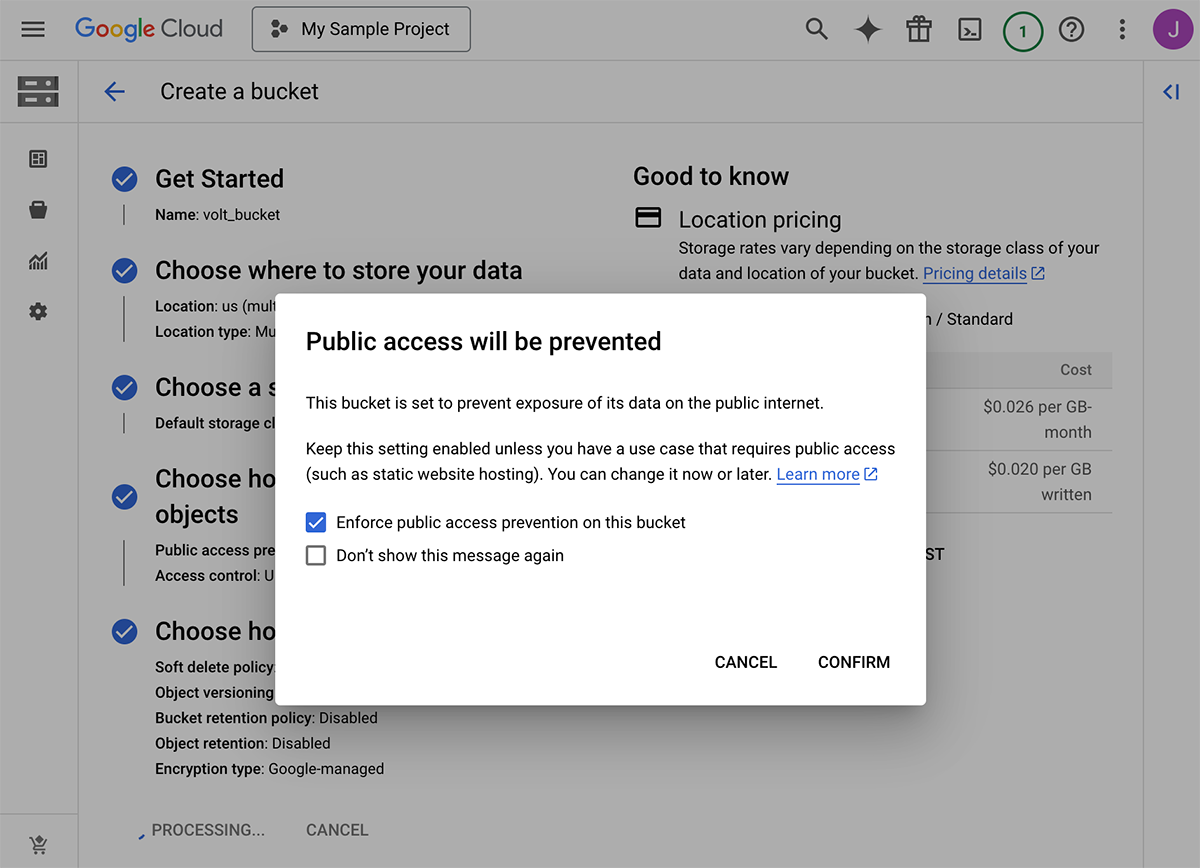

Create a Google Cloud Storage Bucket for Raw Data

Cloud Storage is a scalable, fully managed object storage service that is highly available and durable. You can store any type of data in Cloud Storage, including images, videos, and other files.

Here's how you can create a Cloud Storage bucket:

Click on the "Navigation menu" on the top left corner of the page. You will see the "Storage" menu. Click on it.

Click on the "Browser" link. You will see a "Create Bucket" button. Click on it.

Enter the bucket name, choose the location, and click on the "Create" button. You can also choose the storage class and other settings based on your requirements.

Once the bucket is created, you can start uploading files to the bucket. You can also set up lifecycle policies, versioning, and other settings for the bucket.

To use Google Cloud Storage (GCS) in a project:

Run the following command to list the buckets in the project:

gsutil lsThis will list all the buckets in the project. You can also run other gsutil commands to manage objects in the buckets.

Run the following command to copy a file to a bucket:

gsutil cp [SOURCE_FILE] gs://[BUCKET_NAME]/[DESTINATION_FILE]Example:

gsutil cp file.txt gs://my-bucket/file.txtThis will copy the file.txt file to the my-bucket bucket in GCS.

Assign roles:

gcloud projects add-iam-policy-binding [PROJECT_ID] --member=user:[EMAIL] --role=[ROLE]Example:

gcloud projects add-iam-policy-binding project-sample-412306a7 --member=user:

[email protected] --role=roles/storage.adminReplace [PROJECT_ID], [EMAIL], and [ROLE] with your actual project ID, email

address, and role.

These are some of the basic commands you can run in Cloud Shell to manage your GCP resources. You can explore more

commands and options by running gcloud --help or visiting the gcloud documentation.

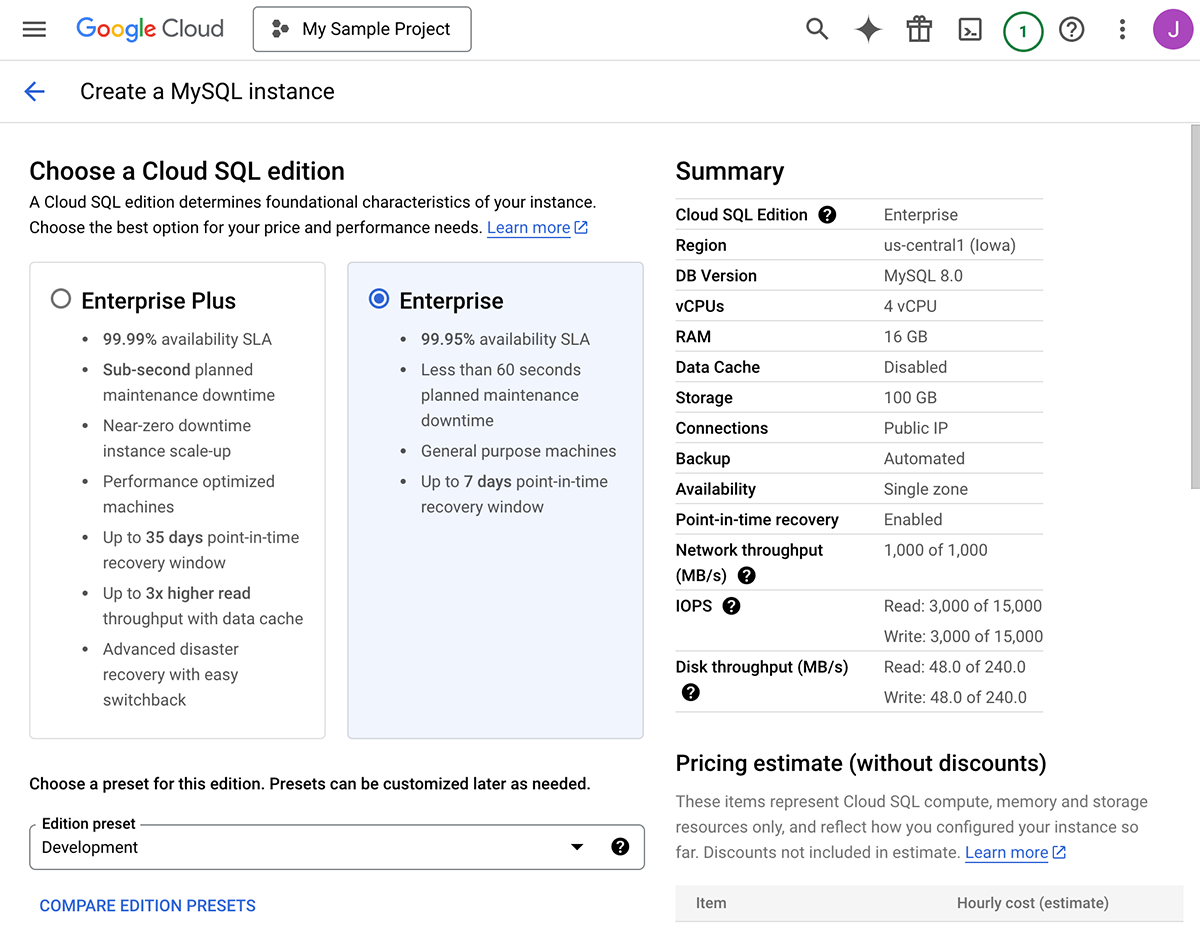

Create a Cloud SQL (MySQL) Instance on Google Cloud

Cloud SQL is a fully managed relational database service that makes it easy to set up, maintain, manage, and administer your MySQL, PostgreSQL, or SQL Server databases in the cloud.

Here's how you can create a Cloud SQL instance:

Click on the "Navigation menu" on the top left corner of the page. You will see the "SQL" menu. Click on it.

Click on the "Create Instance" button. You will see options to create a MySQL, PostgreSQL, or SQL Server instance. Choose the database engine you want to use.

Compute Engine API is required to enable some of the Cloud SQL features. Though you will not be using Compute Engine directly, you need to enable it to use some of the Cloud SQL features. There will not be any cost associated with it.

Enter the instance ID, choose the database version, and set up the root password. You can also choose the region, machine type, and other settings based on your requirements.

Once the instance is created, you can connect to the instance using the Cloud SQL Proxy or other tools. You can also create databases, users, and tables in the instance.

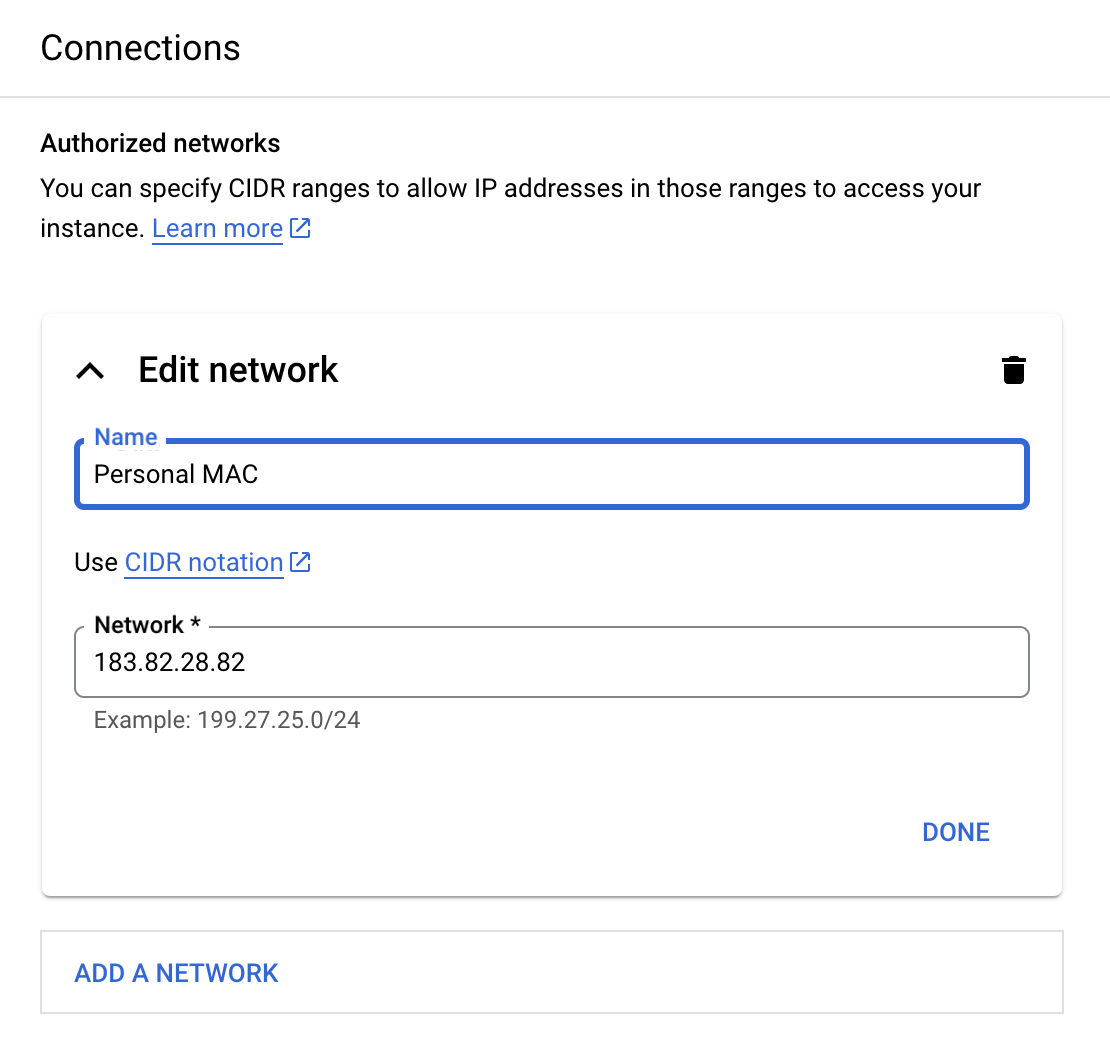

How to connect to database using a client (DBeaver)

Download and install DBeaver, a free and open-source database tool that supports MySQL, PostgreSQL, and other databases.

You should authorize your machine for access to get cloud sql connection. Find your IP address and add it in authorized networks list and save the settings. Save button is at the bottom of the page, I wasted an hour believing that its AJAX based and assumed its saved.

Open DBeaver and click on the "Database" menu. You will see options to create a new connection. Click on it.

Choose the database type (MySQL, PostgreSQL, etc.) and enter the connection details (host, port, username, password, etc.).

Click on the "Test Connection" button to test the connection. If the connection is successful, click on the "Finish" button to save the connection.

Once the connection is saved, you can see the database in the DBeaver interface. You can expand the database to see the tables, views, and other objects in the database.

You can now run SQL queries, create databases, users, and tables, and perform other tasks in the database using DBeaver.

Deploy the Python/PHP Application to Google App Engine

App Engine is a fully managed serverless platform that allows you to build and deploy applications on Google's infrastructure. You can deploy applications written in Python, PHP, Java, Go, and other languages on App Engine.

Here's how you can deploy an application on App Engine:

Click on the "Navigation menu" on the top left corner of the page. You will see the "App Engine" menu. Click on it.

Click on the "Create Application" button. You will see options to create an application using Python, PHP, Java, Go, or other languages. Choose the language you want to use.

Enter the application ID, choose the region, and click on the "Create" button. You can also choose the runtime, memory allocation, and other settings based on your requirements.

Once the application is created, you can deploy your code to the application using the gcloud command-line tool or the Cloud Console. You can also set up custom domains, SSL certificates, and other settings for the application.

Use Google Cloud Shell for GCP, App Engine, and Cloud SQL

Cloud Shell is a free-to-use browser-based command-line tool that allows you to manage your GCP resources from the browser. You can use Cloud Shell to run gcloud commands, deploy applications, and perform other tasks on GCP.

Here's how you can use Cloud Shell:

Click on the "Activate Cloud Shell" button on the top right corner of the page. You will see a terminal window open at the bottom of the page.

You can run gcloud commands, deploy applications, and perform other tasks in the Cloud Shell. You can also use the Cloud Shell Editor to edit code, view logs, and debug applications.

Cloud Shell provides 5 GB of persistent disk storage, which allows you to store files and data across sessions. You can also customize the Cloud Shell environment by installing additional tools and libraries.

To authorize Cloudshell access to project:

Run the following command in Cloud Shell to set the project:

gcloud config set project [PROJECT_ID]Example:

gcloud config set project project-sample-412306a7Replace [PROJECT_ID] with your actual project ID.

To authenticate and authorize Cloud Shell, run:

gcloud auth loginThis will open a browser window where you can log in with your Google account and authorize access to the project.

Connect cloud sql with shell

Though using a database client is convenient, for a quick check or to execute regular queries, shell is convenient.

Run the following command to connect to the Cloud SQL instance:

gcloud sql connect [INSTANCE_ID] --user=rootExample:

gcloud sql connect my-instance --user=rootReplace [INSTANCE_ID] with your actual Cloud SQL instance ID.

Once connected, you can run SQL queries, create databases, users, and tables, and perform other tasks in the Cloud SQL instance.

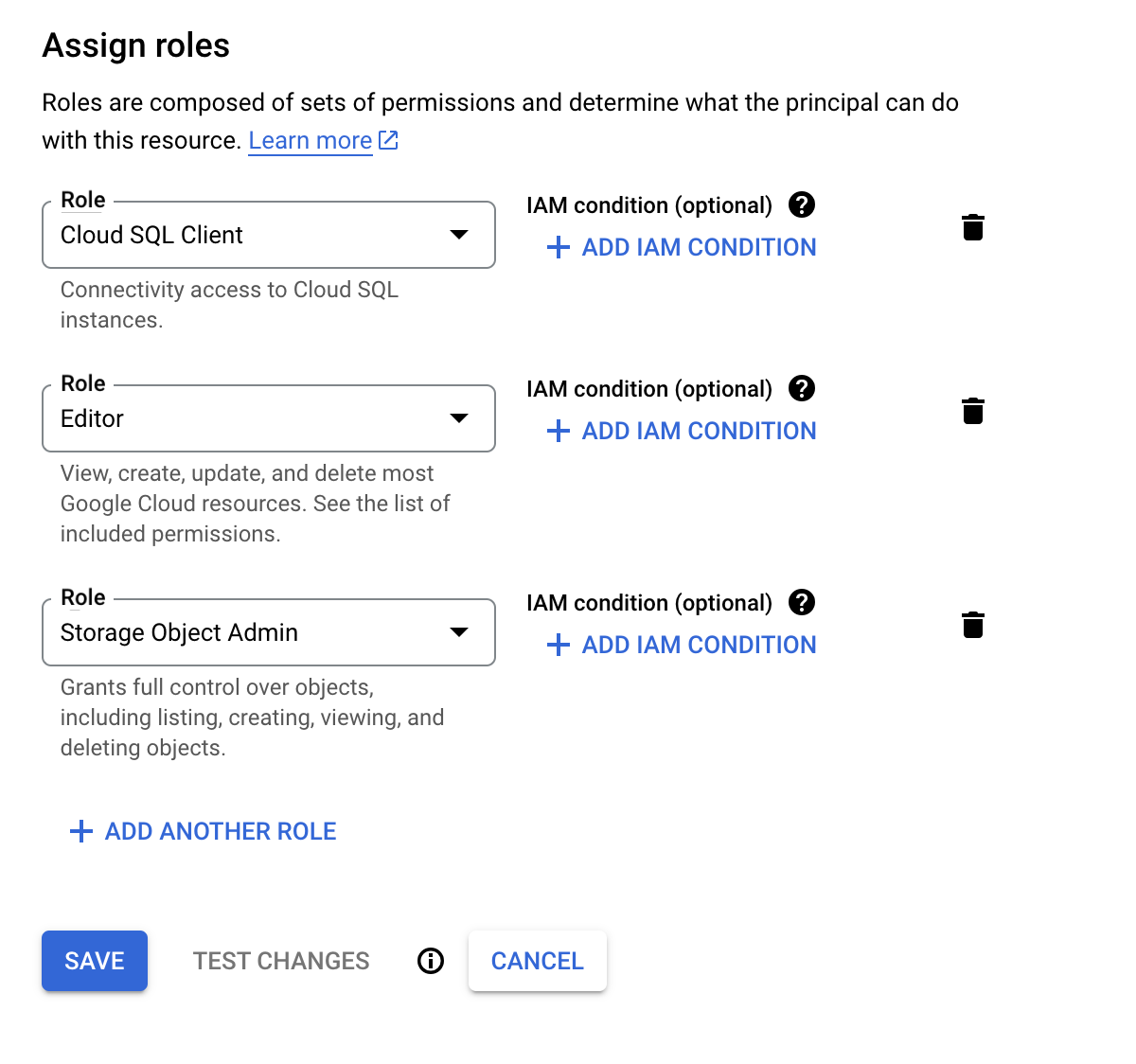

IMPORTANT: Add IAM roles for GAE project to access Cloud SQL:

To allow your App Engine application to access your Cloud SQL instance, you need to assign the appropriate IAM roles to the App Engine service account.

Here's how you can add IAM roles for your App Engine project:

- Go to the IAM & Admin page in the Google Cloud Console.

- Find the App Engine default service account. It usually has the format

[PROJECT_ID]@appspot.gserviceaccount.com. - Click on the pencil icon next to the service account to edit its roles.

- Click on "Add Another Role" and select the following roles:

- Cloud SQL Client: This role allows the service account to connect to Cloud SQL instances.

- Cloud SQL Admin (optional): This role allows the service account to manage Cloud SQL instances.

- Click "Save" to apply the changes.

Once the roles are assigned, your App Engine application will be able to access the Cloud SQL instance using the service account.

Python Web Service to POST Real-Time Data to Cloud Storage and Cloud SQL

We have seen enough of the guide. It has already become too long. Let's jump into the code and see the moving parts.

Here is a simple Python web service that listens for POST requests and writes the data to a Cloud SQL database and a Cloud Storage bucket.

It is a simple Flask application that listens for POST requests on the /data endpoint. The

incoming

data is validated, sanitized, and stored in a Cloud SQL database and a Cloud Storage bucket.

Make sure to install the required libraries using pip:

pip install flask sqlalchemy pymysql google-cloud-storage- Flask: Used to handle HTTP requests.

- SQLAlchemy: Used for database interactions.

- Google Cloud Storage client library for storing data in Google Cloud Storage.

<from flask import Flask, request, jsonify

from google.cloud import storage

from datetime import datetime

import logging

import os

from sqlalchemy import create_engine, Column, Integer, String, DateTime, Text

from sqlalchemy.ext.declarative import declarative_base

from sqlalchemy.orm import sessionmaker

app = Flask(__name__)

# Configuration

DATABASE_URI = 'mysql+pymysql://root:password@localhost/your_database'

GCS_BUCKET_NAME = 'positive-apex-448606-f7.appspot.com'

from logging.handlers import RotatingFileHandler

# Setup rolling file handler for logging

handler = RotatingFileHandler(LOG_FILE, maxBytes=2000, backupCount=5)

handler.setLevel(logging.INFO)

app.logger.addHandler(handler)

STORAGE_FILENAME = 'data.txt'

LOG_FILE = 'app.log'

# Setup logging

logging.basicConfig(filename=LOG_FILE, level=logging.INFO)

# Setup SQLAlchemy

Base = declarative_base()

engine = create_engine(DATABASE_URI)

Session = sessionmaker(bind=engine)

session = Session()

class LiveData(Base):

__tablename__ = 'tbl_live_data'

id = Column(Integer, primary_key=True)

received_ts = Column(DateTime)

data = Column(Text)

meta = Column(Text)

class StructuredMaster(Base):

__tablename__ = 'tbl_structured_master'

id = Column(Integer, primary_key=True)

live_data_id = Column(Integer)

received_ts = Column(DateTime)

datasourceid = Column(String(255))

filename = Column(String(255))

create_ts = Column(DateTime)

class StructuredDetail(Base):

__tablename__ = 'tbl_structured_detail'

id = Column(Integer, primary_key=True)

structured_master_id = Column(Integer)

received_ts = Column(DateTime)

datasourceid = Column(String(255))

data_key = Column(String(255))

data_value = Column(String(255))

create_ts = Column(DateTime)

def get_post_param(key):

return request.form.get(key)

def store_to_gcs(data):

client = storage.Client()

bucket = client.bucket(GCS_BUCKET_NAME)

blob = bucket.blob(STORAGE_FILENAME)

if blob.exists():

current_content = blob.download_as_text()

else:

current_content = ''

new_content = current_content + data + '\n'

blob.upload_from_string(new_content)

def store_to_mysql_live_data(session, received_ts, data, meta):

live_data = LiveData(received_ts=received_ts, data=data, meta=meta)

session.add(live_data)

session.commit()

return live_data.id

def extract_meta_data(meta):

parts = meta.split(';')

datasourceid = None

filename = None

for part in parts:

if part.startswith('datasourceid='):

datasourceid = part[len('datasourceid='):]

elif part.startswith('filename='):

filename = part[len('filename='):]

return {'datasourceid': datasourceid, 'filename': filename}

def store_to_mysql_structured_master(session, live_data_id, received_ts, meta):

extracted_meta = extract_meta_data(meta)

datasourceid = extracted_meta['datasourceid']

filename = extracted_meta['filename']

create_ts = datetime.now()

structured_master = StructuredMaster(

live_data_id=live_data_id,

received_ts=received_ts,

datasourceid=datasourceid,

filename=filename,

create_ts=create_ts

)

session.add(structured_master)

session.commit()

return structured_master.id

def store_to_mysql_structured_detail(session, structured_master_id, received_ts, meta, data):

extracted_meta = extract_meta_data(meta)

datasourceid = extracted_meta['datasourceid']

lines = data.strip().split('\n')

keys = lines[0].split(',')

values = lines[1].split(',')

for index, key in enumerate(keys):

if key == 'HTmeter01_kWh_Total_Import':

create_ts = datetime.now()

structured_detail = StructuredDetail(

structured_master_id=structured_master_id,

received_ts=received_ts,

datasourceid=datasourceid,

data_key=key,

data_value=values[index],

create_ts=create_ts

)

session.add(structured_detail)

session.commit()

def store_to_mysql(data, meta):

received_ts = datetime.now()

live_data_id = store_to_mysql_live_data(session, received_ts, data, meta)

if not live_data_id:

logging.error("Failed to store data in MySQL - store_to_mysql_live_data")

return False

structured_master_id = store_to_mysql_structured_master(session, live_data_id, received_ts, meta)

if not structured_master_id:

logging.error("Failed to store data in MySQL - store_to_mysql_structured_master")

return False

store_to_mysql_structured_detail(session, structured_master_id, received_ts, meta, data)

return True

@app.route('/process_request', methods=['POST'])

def process_request():

if request.method != 'POST':

logging.error("Invalid request method")

return "Invalid request method. Only POST is allowed.", 400

data = get_post_param('data')

meta = get_post_param('meta')

if not data:

logging.error("Missing data")

return "Invalid request. Missing data.", 400

# Backup storage to Google Cloud Storage Default Bucket

store_to_gcs(meta + ':' + data)

if not store_to_mysql(data, meta):

return "Data storage error", 500

return "OK", 200

if __name__ == '__main__':

app.run(debug=True)>This code defines a Flask application that listens for POST requests on the /process_request endpoint.

The

incoming data is validated, sanitized, and stored in a Cloud SQL database and a Cloud Storage bucket.

The store_to_gcs function stores the incoming data in a Cloud Storage bucket. The

store_to_mysql

function stores the data in a MySQL database using SQLAlchemy.

The process_request function handles the incoming POST requests, validates the data, and

calls the

storage

functions to store the data in Cloud SQL and Cloud Storage.

Make sure to replace the DATABASE_URI and GCS_BUCKET_NAME

variables with your actual

database

URI and bucket name.

Run the Flask application using the following command:

python app.pyThis will start the Flask application on http://localhost:5000. You can now send POST

requests to the

/process_request endpoint to store data in Cloud SQL and Cloud Storage.

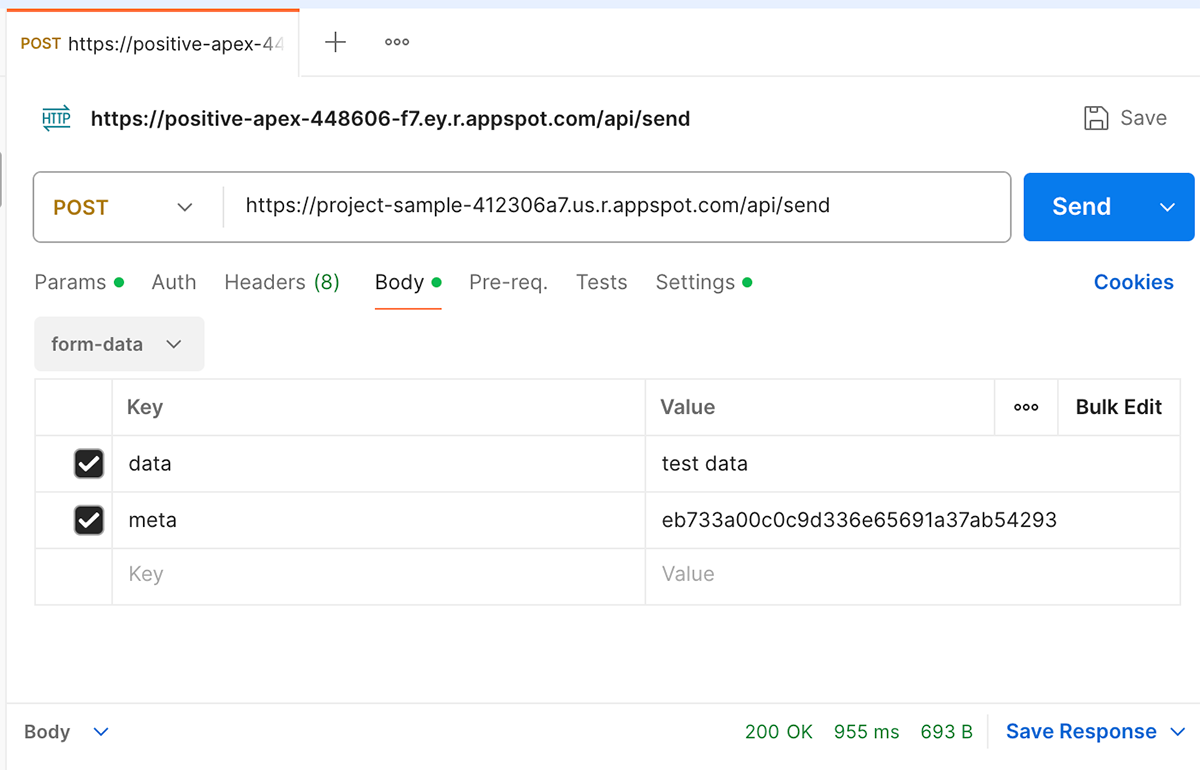

How to test the webservice

It is better to test the application in your local environment before you deploy it in Google Cloud. Because the deployment process is time-consuming and you may need to make changes to the code multiple times.

Use a tool like Postman to send POST requests to the /process_request endpoint. You can

send JSON data

in the request body with the data and meta fields (or use the

key-value pair in the Body).

Here's an example of a POST request:

POST /process_request HTTP/1.1

Host: localhost:5000

Content-Type: application/json

{

"data": "HTmeter01_kWh_Total_Import,HTmeter01_kWh_Total_Export\n100,50",

"meta": "datasourceid=12345;filename=data.csv"

}This request sends the data HTmeter01_kWh_Total_Import,HTmeter01_kWh_Total_Export\n100,50

with the meta

data datasourceid=12345;filename=data.csv to the /process_request endpoint.

That's it! You have successfully created a Python web service that listens for POST requests and stores the data in Google Cloud Platform services.

Deploy the Python Web Service to Google App Engine (GAE)

Now that you have tested the application in your local environment, it's time to deploy it to Google App Engine. The deployment process is straightforward and can be done using the gcloud command-line tool.

Here's how you can deploy the application to Google App Engine:

app.yaml to deploy in Google App Engine

You need the following YAML file to deploy in GAE.

runtime: python

env: flex

runtime_config:

operating_system: "ubuntu22"

python_version: "3.8"

handlers:

- url: /.*

script: auto

env_variables:

APP_ENV: production

DB_HOST: /cloudsql/project-sample-412306a7:europe-west4:xyz

DB_NAME: test_sample_db

DB_USER: db_usr_name

DB_PASSWORD: 'abcd1234'

beta_settings:

cloud_sql_instances: project-sample-412306a7:europe-west4:xyzReplace the DB_HOST, DB_NAME, DB_USER,

and DB_PASSWORD with

your actual database details.

Run the following command to deploy the application:

gcloud app deployThis command will deploy the application to Google App Engine. You can access the application using the URL provided after the deployment is complete.

Make sure to replace the DATABASE_URI and GCS_BUCKET_NAME

variables with your actual

database

URI and bucket name before deploying the application.

Once the application is deployed, you can send POST requests to the /process_request

endpoint to store

data in Cloud SQL and Cloud Storage.

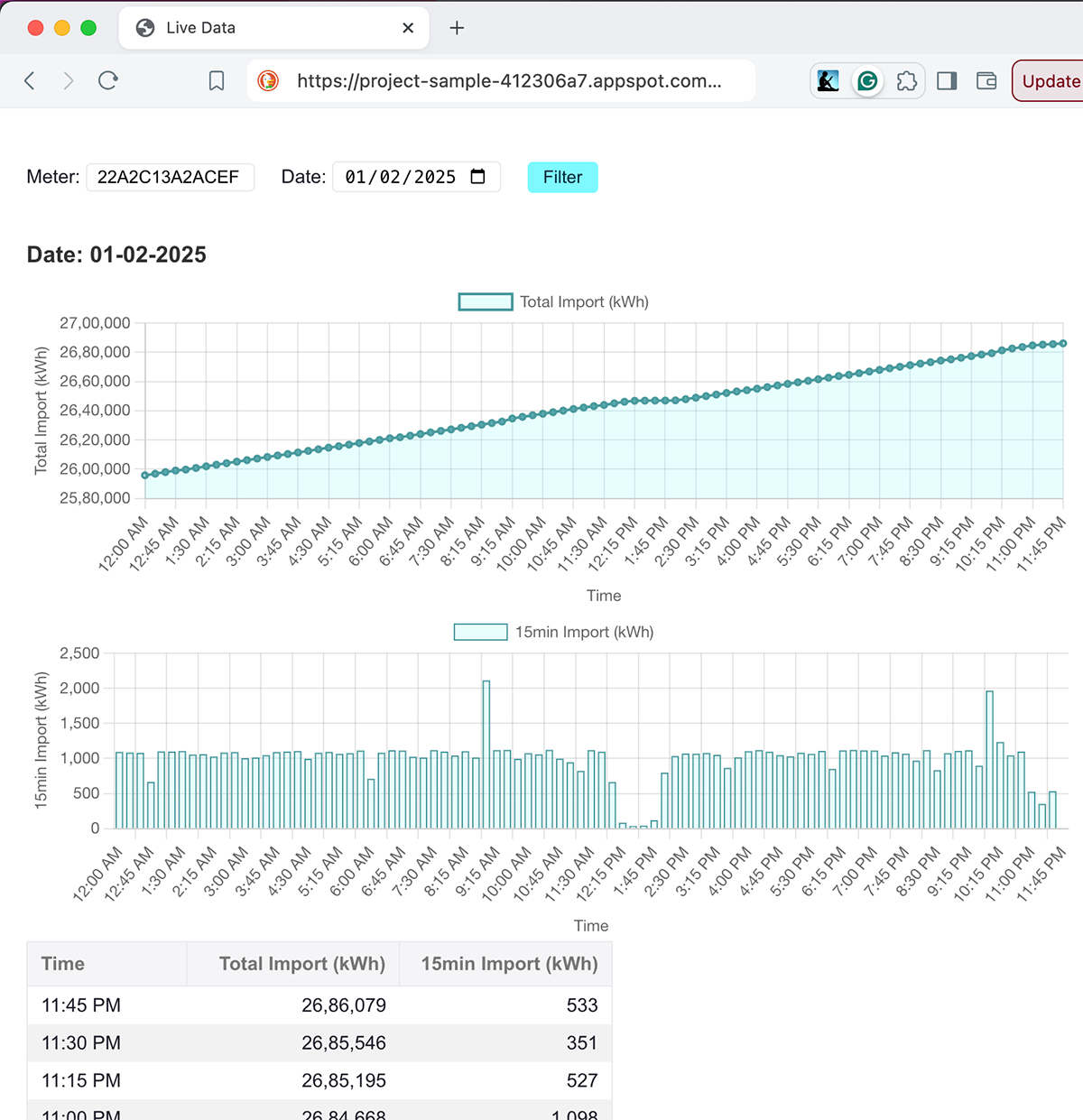

PHP Web Application to Display Real-Time Data from Cloud SQL

Now that you have a Python web service that stores the real-time data in Google Cloud Platform services, you can create a PHP web application to display the data in real-time.

Here is a simple PHP web application that fetches the data from the Cloud SQL database and displays it on a web page. I am purposely keeping it simple here as we have already gone over and about the limit with this guide.

Showing real-time data in a monitoring dashboard using charts is an art by itself. I will write a separate detailed article on how we tamed that beast.

Make sure to install the required libraries using Composer:

composer require google/cloudHere is the PHP code to fetch the data from the Cloud SQL database:

<?php

include __DIR__ . '/Config.php';

include __DIR__ . '/Logger.php';

include __DIR__ . '/DB.php';

function formatIndianNumber($number)

{

if ($number === '') {

return '';

}

$number = (string)$number;

$decimal = '';

if (strpos($number, '.') !== false) {

list($number, $decimal) = explode('.', $number);

}

$lastThree = substr($number, -3);

$restUnits = substr($number, 0, -3);

if ($restUnits != '') {

$restUnits = preg_replace("/\B(?=(\d{2})+(?!\d))/", ",", $restUnits);

$formattedNumber = $restUnits . ',' . $lastThree;

} else {

$formattedNumber = $lastThree;

}

if ($decimal != '') {

$formattedNumber .= '.' . $decimal;

}

return $formattedNumber;

}

$datasourceid = $_GET['datasourceid'] ?? '';

$date = $_GET['date'] ?? date('Y-m-d');

$mysqli = DB::getMysqlConnection();

if ($mysqli === false) {

Logger::logMessage("ERROR: Failed to connect to MySQL");

exit;

}

try {

$sql = "SELECT received_ts, data_value FROM tbl_structured_detail

WHERE datasourceid = ?

AND data_key = 'HTmeter01_kWh_Total_Import'

AND DATE(received_ts) = ?

AND MINUTE(received_ts) IN (0, 15, 30, 45)

ORDER BY received_ts DESC";

$stmt = $mysqli->prepare($sql);

if ($stmt === false) {

Logger::logMessage("ERROR: Error preparing statement: " . $mysqli->error);

$mysqli->close();

exit;

}

$stmt->bind_param("ss", $datasourceid, $date);

if ($stmt->execute()) {

$result = $stmt->get_result();

$data = [];

while ($row = $result->fetch_assoc()) {

$data[] = [

'time' => (new DateTime($row['received_ts']))->format('g:i A'),

'value' => (float)$row['data_value']

];

}

} else {

Logger::logMessage("ERROR: Error executing statement: " . $stmt->error);

$stmt->close();

$mysqli->close();

exit;

}

} catch (Exception $e) {

Logger::logMessage("ERROR: Exception in storing in database: " . $e->getMessage());

exit;

}

?>

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Live Data</title>

<script src="https://cdn.jsdelivr.net/npm/chart.js"></script>

<style>

body {

background-color: #fff;

font-family: Arial, Helvetica Neue, Helvetica, sans-serif;

color: #1E1E2A;

-webkit-font-smoothing: antialiased;

margin: 0;

padding: 0;

font-size: .9rem;

}

.container {

margin: 0 auto;

padding: 40px 20px;

}

.row {

display: inline-block;

margin-bottom: 20px;

}

table {

border: 1px solid #e9eaee;

table-layout: auto;

border-collapse: collapse;

}

table th {

white-space: nowrap;

overflow: hidden;

padding: 8px 10px;

text-align: left;

color: #757575;

border: #e9eaee 1px solid;

background-color: #f5f5f7;

}

tr:nth-child(even) {

background-color: #f2f2f2;

}

table td {

white-space: nowrap;

overflow: hidden;

border: none;

padding: 6px 10px;

}

tr:hover td {

background-color: rgb(236 236 236);

}

h3 {

font-size: 1.2em;

color: #333;

margin-bottom: 10px;

}

input[type="text"],

input[type="date"] {

border-radius: 4px;

border: 1px solid #e9eaee;

padding: 2px 8px;

width: 110px;

}

button {

border: 0;

background-color: #7df9ff;

padding: 4px 12px;

border-radius: 4px;

color: #002b3d;

font-weight: 500;

}

button:hover {

cursor: pointer;

background-color: #4fffd6;

}

.chart-container {

height: 250px;

}

</style>

</head>

<body>

<div class="container">

<form method="GET">

<div class="row">

<label>Meter:</label>

<input style="margin-right: 16px;" type="text" name="datasourceid" value="<?php echo htmlspecialchars($datasourceid); ?>" required>

</div>

<div class="row">

<label>Date:</label>

<input style="margin-right: 16px;" type="date" name="date" value="<?php echo htmlspecialchars($date); ?>">

</div>

<button type="submit">Filter</button>

</form>

<h3>Date: <?php echo (new DateTime($date))->format('d-m-Y'); ?></h3>

<!-- Chart Container -->

<div class="chart-container">

<canvas id="timeSeriesChart"></canvas>

</div>

<!-- Bar Chart Container -->

<div class="chart-container">

<canvas id="barChart"></canvas>

</div>

<table border="1">

<thead>

<tr>

<th style="width: 100px;">Time</th>

<th style="width: 140px;text-align:right">Total Import (kWh)</th>

<th style="width: 140px;text-align:right">15min Import (kWh)</th>

</tr>

</thead>

<tbody>

<?php

for ($i = 0; $i < count($data); $i++) {

if ($i === count($data) - 1) {

$difference = '';

} else {

$difference = round($data[$i]['value'] - $data[$i + 1]['value']);

}

?>

<tr>

<td><?php echo htmlspecialchars($data[$i]['time']); ?></td>

<td style="width: 140px;text-align:right"><?php echo htmlspecialchars(formatIndianNumber(round($data[$i]['value']))); ?></td>

<td style="width: 140px;text-align:right"><?php echo htmlspecialchars(formatIndianNumber($difference)); ?></td>

</tr>

<?php

}

?>

</tbody>

</table>

</div>

<script>

// Get the data from PHP and prepare it for the chart

const chartData = <?php echo json_encode($data); ?>;

// Reverse the data for the chart only

const reversedChartData = [...chartData].reverse();

// Extract labels (time) and data (values) from the reversedChartData

const labels = reversedChartData.map(item => item.time);

const values = reversedChartData.map(item => item.value);

// Format numbers in Indian style

const indianNumberFormatter = new Intl.NumberFormat('en-IN');

// Create the line chart

const ctx = document.getElementById('timeSeriesChart').getContext('2d');

const timeSeriesChart = new Chart(ctx, {

type: 'line',

data: {

labels: labels,

datasets: [{

label: 'Total Import (kWh)',

data: values,

borderColor: '#4b9a9e',

backgroundColor: 'rgba(125, 249, 255, 0.2)',

borderWidth: 2,

fill: true,

pointRadius: 2, // Reduce the size of the point

pointHoverRadius: 4 // Reduce the size of the point on hover

}]

},

options: {

responsive: true,

maintainAspectRatio: false, // Allow custom height

scales: {

x: {

title: {

display: true,

text: 'Time'

}

},

y: {

title: {

display: true,

text: 'Total Import (kWh)'

},

ticks: {

callback: function(value) {

return indianNumberFormatter.format(value); // Indian-style formatting

}

}

}

},

plugins: {

tooltip: {

callbacks: {

label: function(context) {

let label = context.dataset.label || '';

if (label) {

label += ': ';

}

label += indianNumberFormatter.format(context.raw); // Indian-style formatting in tooltip

return label;

}

}

}

}

}

});

// Prepare data for the bar chart

const barLabels = labels;

const barValues = [];

for (let i = 0; i < values.length; i++) {

if (i === (values.length - 1)) {

barValues.push(0); // Push 0 for the last row

} else {

difference = values[i + 1] - values[i];

barValues.push(difference);

}

}

// Create the bar chart

const barCtx = document.getElementById('barChart').getContext('2d');

const barChart = new Chart(barCtx, {

type: 'bar',

data: {

labels: barLabels,

datasets: [{

label: '15min Import (kWh)',

data: barValues,

backgroundColor: 'rgba(125, 249, 255, 0.2)',

borderColor: '#4b9a9e',

borderWidth: 1

}]

},

options: {

responsive: true,

maintainAspectRatio: false, // Allow custom height

scales: {

x: {

title: {

display: true,

text: 'Time'

}

},

y: {

title: {

display: true,

text: '15min Import (kWh)'

},

ticks: {

callback: function(value) {

return indianNumberFormatter.format(value); // Indian-style formatting

}

}

}

},

plugins: {

tooltip: {

callbacks: {

label: function(context) {

let label = context.dataset.label || '';

if (label) {

label += ': ';

}

label += indianNumberFormatter.format(context.raw); // Indian-style formatting in tooltip

return label;

}

}

}

}

}

});

</script>

</body>

</html>

<?php

$stmt->close();

$mysqli->close();

?>I have used Chart.js JavaScript charting library to display two different charts. First one is a time series chart and the second one is a bar chart that shows the consumption for every 15 minute interval.

Make sure to replace the DB_HOST, DB_NAME, DB_USER, and

DB_PASSWORD

with your actual database details.

That's it! You have successfully created a PHP web application that fetches the real-time data from the Cloud SQL database and displays it on a web page.

This web application is a simple and straightforward thing and is self-explanatory. As stated above, I have given this for the sake of completeness of this guide. The real monitoring dashboard is out of scope of this guide and I will write a detailed seprate article to discuss that.

Conclusion

In this guide, we have seen how to create a Python web service that stores real-time data in Google Cloud Platform services and a PHP web application that fetches and displays the data. We have covered a lot of ground in this guide and I hope you found it helpful.

Google Cloud Platform offers a wide range of services that can be used to build and deploy applications in the cloud. By using services like Cloud SQL, Cloud Storage, and App Engine, you can create scalable and reliable applications that can handle real-time data.

Thank you for reading! Happy coding!

References:

- Google App Engine Documentation

- Google Cloud SQL Documentation

- Google Cloud Storage Documentation

- Google Cloud Logging Documentation

- Google Cloud Monitoring Documentation

- Flask Documentation

- SQLAlchemy Documentation

- Chart.js Documentation

- Sysdig: Google Cloud Platform (GCP) security best practices

- Patterns for scalable and resilient apps