Building an AI-Powered Spam Detection Layer for Form Submissions Using the ChatGPT API

I. Introduction

Form spam has long been a persistent nuisance on the web. Whether it's a contact form, signup form, or feedback submission, open input fields are prime targets for bots and malicious users. Traditional defenses such as CAPTCHAs, honeypot fields, and regex-based keyword filters are no longer sufficient on their own. Bots keep evolving, using obfuscation techniques and even human-in-the-loop services to bypass basic anti-spam layers.

For developers building custom form workflows, especially on public-facing websites, keeping submissions clean and useful requires more than technical validation. It demands an intelligent system that can understand intent, analyze context, and detect subtle patterns that raw text filters miss.

That's where Large Language Models (LLMs), like OpenAI's ChatGPT, come in.

In this article, I'll walk you through building an AI-powered spam detection layer using the ChatGPT API, designed to plug into any custom form submission backend. Instead of relying only on static rules, I'll use the model's understanding of natural language to decide whether a form submission looks genuine or spammy, classifying it in real time at the point of submission.

This is not just about feeding a string to GPT and seeing what comes back. We'll go deep into the prompt engineering, response normalization, hybrid scoring with heuristics, cost optimizations, and abuse protection that are essential for deploying ChatGPT safely and efficiently in production.

The entire solution is framed from the perspective of a form builder developer: someone like me, who builds forms, manages backends, and maintains data quality.

By the end of the article, you'll have:

- A backend pipeline that uses the ChatGPT API to analyze and classify form entries

- A hybrid scoring model that combines AI output with deterministic spam signals

- A cost-aware, fault-tolerant implementation ready for real-world production

Let's begin by looking at the system architecture and how ChatGPT fits into the form submission lifecycle.

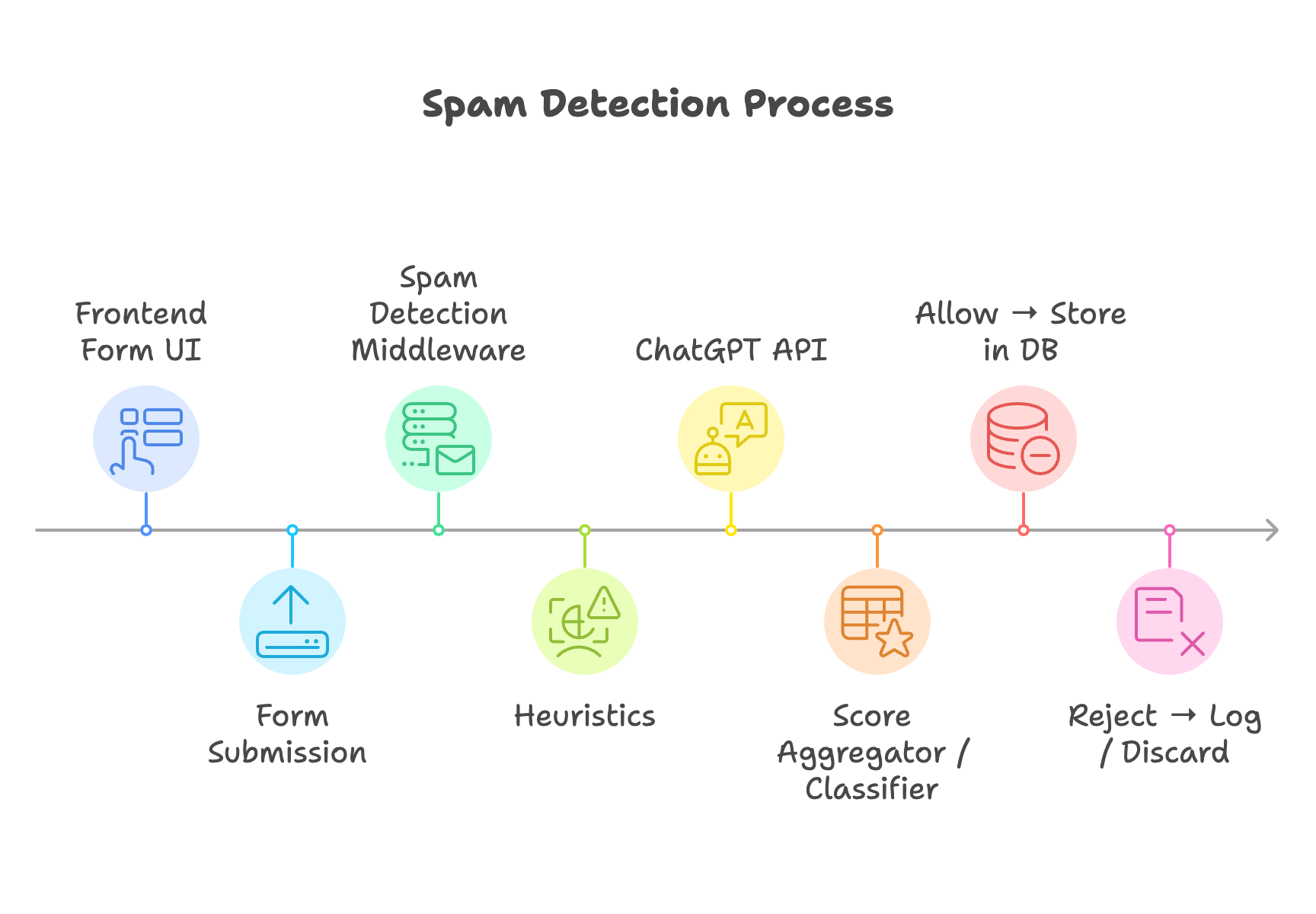

II. System Design & Architecture

To embed an intelligent spam detection layer into your form handling pipeline, it's essential to design the system in a modular and fault-tolerant way. The ChatGPT API should enhance your decision-making, not become a bottleneck or single point of failure. This is especially important for public-facing forms where user experience and data integrity are paramount.

Below is a breakdown of the system architecture and the role each component plays.

1. Frontend (Form UI)

This is your typical form interface, such as a contact, signup, or feedback form. The spam detection logic is abstracted away from the UI, keeping it clean and independent.

- Example fields: name, email, message

- Frontend does not handle any spam filtering

- Optionally sends extra metadata (e.g., submission timestamp, client time)

2. Form Submission Endpoint (PHP Backend)

This receives the form data via POST, performs basic validation, and forwards the submission to the spam detection layer.

- Validates required fields

- Normalizes the data

- Appends metadata like client IP, headers, referrer, and submission duration

3. Spam Detection Middleware

This is a custom PHP component that encapsulates the spam logic. It runs before any database insert happens. Its job:

- Evaluate the submission using both heuristic rules and the ChatGPT API

- Combine the results to produce a final spam score

- Decide: allow, reject, or flag for review

4. Heuristic Rules Engine

This is a lightweight, fast filter layer that uses known static spam patterns to assign points. Example signals:

- Too many links → +2

- Contains blacklisted keywords → +3

- Form filled too quickly → +1

- Disposable email domain → +2

These rules act as the first line of defense and provide redundancy in case the AI fails or becomes slow.
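The following is a minimal sketch of such a rules engine that applies the four example signals above with their point values. The function name `scoreHeuristics`, the keyword list, the one-link threshold, the 3-second cutoff, and the disposable-domain list are illustrative assumptions. Returning the names of the rules that fired alongside the total makes each decision easier to explain in your logs.

```php
<?php
// Hypothetical weighted rules engine using the example point values above.
// Thresholds, keywords, and domains are illustrative assumptions.
function scoreHeuristics(array $formData): array
{
    $message = (string) ($formData['message'] ?? '');
    $email = strtolower((string) ($formData['email'] ?? ''));
    $seconds = $formData['timeToSubmit'] ?? null;

    $triggered = [];

    // Too many links: more than one http(s) URL in the message (+2)
    if (preg_match_all('~https?://~i', $message) > 1) {
        $triggered['too_many_links'] = 2;
    }
    // Blacklisted keywords, matched case-insensitively as whole words (+3)
    if (preg_match('/\b(viagra|casino|crypto giveaway)\b/i', $message) === 1) {
        $triggered['blacklisted_keyword'] = 3;
    }
    // Form filled too quickly; non-numeric values such as 'unknown' are ignored (+1)
    if (is_numeric($seconds) && (float) $seconds <= 3) {
        $triggered['fast_submit'] = 1;
    }
    // Disposable email domain: compare everything after the last '@' (+2)
    $atPos = strrpos($email, '@');
    $domain = $atPos === false ? '' : substr($email, $atPos + 1);
    if (in_array($domain, ['mailinator.com', '10minutemail.com'], true)) {
        $triggered['disposable_email'] = 2;
    }

    return ['score' => array_sum($triggered), 'triggered' => array_keys($triggered)];
}
```

Because each rule is a plain key/weight pair, moving the weights into a config array later is a small change.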

5. ChatGPT API Integration

This layer formats a prompt, calls the ChatGPT API with the normalized submission, and retrieves a classification (SPAM / LEGIT / SUSPICIOUS).

- Few-shot learning format with past examples

- Uses gpt-3.5-turbo or gpt-4 via OpenAI's API

- Parses the response string safely

- Controlled using temperature = 0 (near-deterministic output)

6. Score Aggregator / Decision Engine

Once both the heuristics and GPT classification are available, this component computes a final score using weighted rules.

Example:

- If GPT says SPAM → +5

- If heuristics ≥ 3 → +3

- If suspicious terms found → +2

Decision threshold:

- Score ≥ 6 → Reject

- Score between 4 - 5 → Flag

- Score ≤ 3 → Accept

This ensures neither heuristics nor GPT alone makes the final call — it's a hybrid decision.
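The aggregation rules above fit in a small pure function. This sketch hard-codes the example weights and thresholds from this section; `decideSubmission` is a hypothetical name, and in practice you would load the weights from config.

```php
<?php
// Hypothetical score aggregator mirroring the example rules above:
// GPT says SPAM = +5, heuristics >= 3 = +3, suspicious terms found = +2.
function decideSubmission(string $gptLabel, int $heuristicScore, bool $suspiciousTerms): array
{
    $score = 0;
    if ($gptLabel === 'SPAM') {
        $score += 5;
    }
    if ($heuristicScore >= 3) {
        $score += 3;
    }
    if ($suspiciousTerms) {
        $score += 2;
    }

    // Thresholds: >= 6 reject, 4 to 5 flag, <= 3 accept
    if ($score >= 6) {
        $decision = 'REJECT';
    } elseif ($score >= 4) {
        $decision = 'FLAG';
    } else {
        $decision = 'ACCEPT';
    }

    return ['score' => $score, 'decision' => $decision];
}
```

Note how the weights enforce the hybrid rule: a SPAM verdict alone scores 5 and is only flagged, while SPAM plus a heuristic score of 3 or more reaches 8 and is rejected.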

7. Storage & Logging Layer

Based on the decision, the backend:

- Inserts the submission into the database (if accepted)

- Logs rejected or flagged items into a spam_logs table for future analysis

- Optionally sends notifications or admin alerts for suspicious submissions

Design Considerations

The design rests on four principles: isolation, performance, fail-safety, and extensibility.

- Isolation: GPT API usage is confined to a service function, so it can be skipped, replaced, or mocked during testing.

- Performance: GPT is only called when the heuristic score falls in an ambiguous range, which saves cost and latency.

- Fail-safety: if the GPT API fails, heuristics alone make the call.

- Extensibility: you can plug in alternate models (Claude, Gemini) or local models later without architectural changes.

This modular approach ensures you're not tightly coupled to any one detection technique. In the next section, we'll look at how to design the input pipeline and prompt for GPT so it makes consistent, accurate decisions.

III. Designing the Input Pipeline for ChatGPT

Before sending form data to the ChatGPT API, it is crucial to normalize and structure the input effectively. ChatGPT is a language model, not a rules engine. Its response quality depends heavily on how we frame the prompt and clean the incoming data.

1. Normalize and Preprocess the Input

Raw user-submitted form data may include excess whitespace, HTML entities, line breaks, or malicious content. Normalize the input to improve clarity and consistency for the model.

```php
// Sample preprocessing in PHP
$input = trim(strip_tags($formData['message']));
$input = preg_replace('/\s+/', ' ', $input);
```

- Remove HTML tags

- Trim whitespace

- Normalize special characters and emojis (optional)
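The snippet above cleans only the message. A slightly fuller sketch applies the same steps to every string field, decodes HTML entities first, and caps the length so a very long submission cannot inflate token usage. The function name `normalizeSubmission` and the 2,000-character cap are assumptions, not part of the original pipeline.

```php
<?php
// Hypothetical helper: normalizes every string field of a submission before
// it is embedded in the prompt. The 2,000-character cap is an assumption.
function normalizeSubmission(array $fields, int $maxChars = 2000): array
{
    $clean = [];
    foreach ($fields as $key => $value) {
        if (!is_string($value)) {
            $clean[$key] = $value; // leave numeric metadata untouched
            continue;
        }
        // Decode entities such as &amp; so the model sees plain text, then drop tags
        $value = strip_tags(html_entity_decode($value, ENT_QUOTES | ENT_HTML5, 'UTF-8'));
        // Collapse whitespace runs (including newlines) into single spaces;
        // with the /u modifier, invalid UTF-8 makes preg_replace() return null
        $value = trim(preg_replace('/\s+/u', ' ', $value) ?? '');
        // Cap the length in characters (not bytes) to bound token usage
        if (preg_match('/^.{0,' . $maxChars . '}/u', $value, $m) === 1) {
            $value = $m[0];
        }
        $clean[$key] = $value;
    }
    return $clean;
}
```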

2. Choose a Prompt Format

Form submissions contain multiple fields. You must serialize them into a readable, unambiguous format for GPT. The most effective style is a labeled flat structure:

```text
Form Submission:
Name: "Daniel"
Email: "daniel@mail.com"
Message: "Check out this link: http://example.com"
Classify: [Your Answer Here]
```

You can optionally add multiple examples above the real input to guide the model. This is known as a few-shot learning approach and helps GPT generalize better.

3. Add Contextual Metadata

While GPT doesn't have access to your environment or headers, you can optionally append metadata like:

- Time taken to submit the form (fast submissions = likely bots)

- IP address (partial or hashed for privacy)

- User agent string or platform (suspicious crawlers can be flagged)
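For the IP item above, one common way to keep only a partial address is to zero out the host bits before the value goes anywhere near the prompt. This sketch keeps the first 24 bits of an IPv4 address and the first 48 bits of an IPv6 address; both prefix lengths are assumptions you can tune, and `anonymizeIp` is a hypothetical helper name.

```php
<?php
// Hypothetical helper: truncates an IP address to a network prefix so the
// prompt carries coarse network info without identifying a single host.
function anonymizeIp(string $ip): ?string
{
    if (filter_var($ip, FILTER_VALIDATE_IP) === false) {
        return null; // not a valid IPv4 or IPv6 address
    }
    $packed = inet_pton($ip); // 4 bytes for IPv4, 16 bytes for IPv6
    $mask = strlen($packed) === 4
        ? "\xff\xff\xff\x00"                               // keep /24
        : str_repeat("\xff", 6) . str_repeat("\x00", 10); // keep /48
    // Bitwise AND on equal-length strings is applied byte by byte in PHP
    return inet_ntop($packed & $mask);
}
```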

Example prompt with metadata:

```text
Form Submission:
Name: "Buy now!"
Email: "offer@example.com"
Message: "Lowest prices on meds, visit http://example.com"
TimeToSubmit: 2 seconds
UserAgent: Mozilla/5.0
Classify: SPAM
```

4. Handle Language Model Limitations

LLMs can occasionally return unexpected or verbose responses. To reduce this:

- Use `temperature = 0` for near-deterministic output

- Instruct the model to reply with exactly one of `SPAM`, `LEGIT`, or `SUSPICIOUS`

- Sanitize and lower-case the response on your backend before matching it

Example instruction appended to the prompt:

```text
Only reply with one word: SPAM, LEGIT, or SUSPICIOUS
```

5. Avoid Unnecessary Data

Do not include:

- IP addresses in full (privacy concern)

- Internal user IDs or database fields

- Large irrelevant blobs (e.g., base64 files, HTML templates)

The goal is to provide GPT just enough context to decide based on the content and behavioral metadata.

Summary

- Clean and flatten all input fields

- Frame them in a clear and consistent prompt format

- Add relevant behavioral metadata for context

- Guide GPT with few-shot examples and strict output instructions

In the next section, we'll explore how to engineer prompts and few-shot examples that maximize GPT's classification accuracy for spam detection.

IV. Prompt Engineering: Teaching GPT to Spot Spam

Prompt engineering is the most critical part of integrating ChatGPT for spam detection. The way you frame the input and guide the model with examples will directly influence its accuracy and consistency.

1. Use Few-Shot Classification Prompts

ChatGPT tends to classify more consistently when it is shown a few examples before the actual input. These are called “few-shot” prompts, and they establish a pattern the model will follow.

Example prompt with two labeled examples:

```text
Form Submission:
Name: "Discount Pharmacy"
Email: "cheapmeds@example.com"
Message: "Order Viagra at 90% off! Visit http://example.com"
TimeToSubmit: 3 seconds
UserAgent: Mozilla/5.0
Classify: SPAM
---
Form Submission:
Name: "Alice Joseph"
Email: "alice.joseph@example.com"
Message: "Hi, I'd like to learn more about your services. Can you call me?"
TimeToSubmit: 42 seconds
UserAgent: Mozilla/5.0
Classify: LEGIT
---
Form Submission:
Name: "Daniel"
Email: "daniel@example.com"
Message: "Hi there, how are you?"
Classify:
```

GPT will see the pattern and continue with a classification based on the structure.

2. Restrict Output Using Clear Instructions

Add a clear instruction to the end of the prompt to restrict the model's output. This makes post-processing reliable and greatly reduces verbose or inconsistent replies.

Recommended instruction:

```text
Only reply with one word: SPAM, LEGIT, or SUSPICIOUS
```

Add it as the final line of the prompt before sending it to the API.

3. Control Model Parameters

Set the right parameters when calling the ChatGPT API to reduce randomness:

- temperature: 0 (for near-deterministic output)

- top_p: 1

- max_tokens: 10 (enough for a one-word reply)

4. Handle Uncertainty

In cases where the model may be unsure (e.g., the message looks suspicious but is not clearly spam), instruct it to respond with SUSPICIOUS. You can treat this category differently in your scoring engine.

Why this matters:

- Too aggressive? You block genuine leads.

- Too lenient? You open doors to sophisticated spam.

5. Keep Prompts Short but Informative

Avoid overloading the prompt with too many examples or metadata. Every model has a finite context window, and overly verbose prompts cost more tokens, add latency, and can make the output less consistent.

Ideal structure:

- 2 to 3 strong labeled examples

- 1 actual submission input

- Clear instruction for output

6. Example PHP Prompt Generator

Here's a simple PHP function to build the full prompt dynamically:

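```php
function buildSpamPrompt(array $input): string {
    // Few-shot examples with known labels (nowdoc: no variable interpolation)
    $examples = <<<'PROMPT'
Form Submission:
Name: "Free Money"
Email: "offer@example.com"
Message: "Click this link now to win!"
TimeToSubmit: 1 second
UserAgent: Mozilla/5.0
Classify: SPAM
---
Form Submission:
Name: "Meera Kumar"
Email: "meera.k@example.com"
Message: "I want to schedule a demo call."
TimeToSubmit: 27 seconds
UserAgent: Mozilla/5.0
Classify: LEGIT
---

PROMPT;

    // json_encode() wraps each value in quotes and escapes embedded quotes and
    // newlines, so user input cannot break the field structure of the prompt
    $quote = fn($value) => json_encode(
        (string) $value,
        JSON_UNESCAPED_UNICODE | JSON_UNESCAPED_SLASHES | JSON_INVALID_UTF8_SUBSTITUTE
    );
    $seconds = $input['timeToSubmit'] ?? null;
    $userAgent = str_replace(["\r", "\n"], ' ', (string) ($input['userAgent'] ?? 'unknown'));

    $submission = "Form Submission:\n";
    $submission .= "Name: " . $quote($input['name'] ?? '') . "\n";
    $submission .= "Email: " . $quote($input['email'] ?? '') . "\n";
    $submission .= "Message: " . $quote($input['message'] ?? '') . "\n";
    // Only numeric times are trusted; anything else becomes 'unknown'
    $submission .= "TimeToSubmit: " . (is_numeric($seconds) ? (int) $seconds . " seconds" : "unknown") . "\n";
    $submission .= "UserAgent: " . $userAgent . "\n\n";
    $submission .= "Classify:";

    return $examples . $submission . "\n\nOnly reply with one word: SPAM, LEGIT, or SUSPICIOUS";
}
```

Summary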

- Use clear and consistent formatting in prompts

- Include a few example submissions with known outcomes

- Use strong instructions to guide the output

- Control randomness using API parameters

- Keep prompt length within context limits

Now that we've crafted the prompt structure, let's integrate this with the ChatGPT API and write code to send and parse responses in the next section.

V. Integration with ChatGPT API (PHP + cURL Example)

With the prompt structure in place, the next step is to connect to the ChatGPT API and process the classification. In this section, we'll write PHP code to:

- Build the final prompt

- Send a request to OpenAI's API using cURL

- Parse and normalize the model's response

- Implement retry and fallback logic

1. API Endpoint and Authentication

OpenAI's chat models are accessed via the https://api.openai.com/v1/chat/completions endpoint.

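You'll need an API key from your OpenAI account.

Store the key securely in an environment variable or server-side config rather than in your source code:

```php
// config.php: getenv() reads the process environment, so a .env file
// (OPENAI_API_KEY=sk-...) must be loaded by a dotenv library or server config
define('OPENAI_API_KEY', getenv('OPENAI_API_KEY'));
```

2. PHP Function to Call ChatGPT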

This function sends a single prompt and returns the classification as SPAM, LEGIT, or SUSPICIOUS.

```php
function classifyFormSubmission(string $prompt): string {
    $url = 'https://api.openai.com/v1/chat/completions';

    $headers = [
        'Content-Type: application/json',
        'Authorization: Bearer ' . OPENAI_API_KEY,
    ];

    $postData = [
        'model' => 'gpt-3.5-turbo',
        'temperature' => 0,
        'max_tokens' => 10,
        'messages' => [
            [
                'role' => 'user',
                'content' => $prompt,
            ],
        ],
    ];

    $ch = curl_init($url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_HTTPHEADER, $headers);
    curl_setopt($ch, CURLOPT_POST, true);
    curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($postData));
    // Fail fast so a slow API call cannot stall the form submission
    curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, 2);
    curl_setopt($ch, CURLOPT_TIMEOUT, 5);

    $response = curl_exec($ch);
    $httpCode = curl_getinfo($ch, CURLINFO_HTTP_CODE);

    if ($response === false) {
        $error = curl_error($ch);
        curl_close($ch);
        throw new Exception('Curl error: ' . $error);
    }
    curl_close($ch);

    if ($httpCode !== 200) {
        throw new Exception('OpenAI API error: HTTP ' . $httpCode);
    }

    $data = json_decode($response, true);
    $output = strtolower(trim($data['choices'][0]['message']['content'] ?? ''));

    // Normalize the response by its first word. A substring check would misread
    // replies such as "legit, not spam" as SPAM; anything unexpected is
    // treated as SUSPICIOUS rather than silently accepted.
    $words = preg_split('/[^a-z]+/', $output, -1, PREG_SPLIT_NO_EMPTY);
    $firstWord = $words[0] ?? '';

    return match ($firstWord) {
        'spam' => 'SPAM',
        'legit' => 'LEGIT',
        default => 'SUSPICIOUS',
    };
}
```

3. Retry and Timeout Handling

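The GPT API can occasionally fail, time out, or return a 5xx error. It's good practice to add retry logic with exponential backoff, but keep the attempt count low because the user is waiting on the form response: with a 5-second cURL timeout per attempt, three attempts plus the 1- and 2-second backoff delays can add up to about 18 seconds in the worst case.

```php
function callWithRetry(string $prompt, int $maxAttempts = 3): string {
    $delay = 1; // seconds

    for ($i = 0; $i < $maxAttempts; $i++) {
        try {
            return classifyFormSubmission($prompt);
        } catch (Exception $e) {
            if ($i === $maxAttempts - 1) {
                break; // out of attempts
            }
            sleep($delay);
            $delay *= 2; // exponential backoff
        }
    }

    return 'SUSPICIOUS'; // fallback category when every attempt failed
}
```

4. Usage Example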

Here's how you can plug this into your form handler:

```php
$formData = [
    'name' => $_POST['name'] ?? '',
    'email' => $_POST['email'] ?? '',
    'message' => $_POST['message'] ?? '',
    'timeToSubmit' => $_POST['timeToSubmit'] ?? 'unknown',
    'userAgent' => $_SERVER['HTTP_USER_AGENT'] ?? 'unknown',
];

$prompt = buildSpamPrompt($formData);
$class = callWithRetry($prompt);

if ($class === 'SPAM') {
    // Reject and log
} elseif ($class === 'SUSPICIOUS') {
    // Flag for review
} else {
    // Accept and store
}
```

5. Safety Tips

- Use HTTPS for all API requests

- Do not expose API keys on frontend

- Set a timeout (e.g., 5 seconds) for cURL

- Validate all user input before sending to the API
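To act on the last tip, a cheap validation pass can reject malformed submissions before they ever reach the API. This is a sketch: `validateSubmission` is a hypothetical helper, and the required fields and the 5,000-byte message cap are assumptions to adjust for your forms.

```php
<?php
// Hypothetical pre-API validation: rejects malformed submissions before they
// cost an API call. Field names match $formData above; limits are assumptions.
function validateSubmission(array $formData, int $maxMessageBytes = 5000): array
{
    $errors = [];
    foreach (['name', 'email', 'message'] as $field) {
        if (trim((string) ($formData[$field] ?? '')) === '') {
            $errors[] = "$field is required";
        }
    }
    $email = (string) ($formData['email'] ?? '');
    if ($email !== '' && filter_var($email, FILTER_VALIDATE_EMAIL) === false) {
        $errors[] = 'email is not a valid address';
    }
    // strlen() counts bytes, which is a conservative cap for multibyte text
    if (strlen((string) ($formData['message'] ?? '')) > $maxMessageBytes) {
        $errors[] = 'message is too long';
    }
    return $errors;
}
```

If it returns a non-empty array, you can respond with a validation error and skip both the heuristics and the GPT call.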

With the API now wired into your backend, the next step is to combine this classification result with heuristic signals to build a more reliable hybrid spam scoring engine.

VI. Hybrid Scoring Model

While ChatGPT can provide powerful language-based classification, relying on it alone is not enough, especially when aiming for production-level reliability, speed, and cost control.

A hybrid scoring model combines the strength of:

- Heuristic rules: fast and deterministic signals based on patterns

- ChatGPT classification: nuanced interpretation of message content

This layered defense reduces false positives and lowers API usage costs.

1. Heuristic Signals

Each rule adds a fixed score when triggered. You can customize the values based on your dataset.

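```php
$score = 0;

// Example heuristic rules
if (substr_count($formData['message'], 'http') > 1) {
    $score += 2;
}

if (preg_match('/(free|offer|buy now|click here)/i', $formData['message'])) {
    $score += 2;
}

// timeToSubmit may be missing or 'unknown', so only compare numeric values
$seconds = $formData['timeToSubmit'] ?? null;
if (is_numeric($seconds) && $seconds <= 3) {
    $score += 1;
}

// parse_url() does not extract the domain from an email address,
// so take everything after the last '@' instead
$atPos = strrpos($formData['email'], '@');
$emailDomain = $atPos === false ? '' : strtolower(substr($formData['email'], $atPos + 1));
if (in_array($emailDomain, ['mailinator.com', '10minutemail.com'], true)) {
    $score += 2;
}
```

You can maintain a central config for weights:

```php
$rules = [
    'too_many_links' => 2,
    'spam_keywords' => 2,
    'fast_submit' => 1,
    'disposable_email' => 2,
];
```

2. Scoring ChatGPT Output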

Once you receive the GPT classification (SPAM, LEGIT, or SUSPICIOUS), convert it to a numeric score to merge with the heuristics.

```php
switch ($gptResult) {
    case 'SPAM':
        $score += 5;
        break;
    case 'SUSPICIOUS':
        $score += 2;
        break;
    case 'LEGIT':
    default:
        $score += 0; // no penalty
}
```

3. Final Decision Thresholds

Define thresholds based on total score. Example rules:

- Score ≥ 6: Reject

- Score 4 - 5: Flag for manual review

- Score ≤ 3: Accept

```php
if ($score >= 6) {
    $status = 'REJECT';
} elseif ($score >= 4) {
    $status = 'FLAG';
} else {
    $status = 'ACCEPT';
}
```

4. Full Example Integration

```php
// Assume $formData and $gptResult are already available;
// logAndReject(), flagForReview(), and saveToDatabase() are your own handlers
$score = 0;

// Apply heuristics
if (substr_count($formData['message'], 'http') > 1) $score += 2;
if (preg_match('/free|buy|offer/i', $formData['message'])) $score += 2;
if (is_numeric($formData['timeToSubmit'] ?? null) && $formData['timeToSubmit'] <= 3) $score += 1;

// Add GPT score
$score += match ($gptResult) {
    'SPAM' => 5,
    'SUSPICIOUS' => 2,
    default => 0,
};

// Decision
if ($score >= 6) {
    logAndReject($formData);
} elseif ($score >= 4) {
    flagForReview($formData);
} else {
    saveToDatabase($formData);
}
```

5. Benefits of Hybrid Approach

- Protects against LLM misclassification

- Lets you skip GPT calls for obviously bad/good inputs

- More customizable for your niche domain or audience

- Heuristics are fast and cost nothing

This scoring engine acts as the final gatekeeper. In the next section, we'll optimize cost and performance by selectively calling GPT only when needed.

VII. Caching & Cost Optimization

Integrating ChatGPT into your spam detection pipeline comes with two primary concerns:

- Latency: Each API call typically adds around 0.5 to 1.5 seconds, depending on the prompt and model

- Cost: OpenAI charges per token, and costs can add up quickly at scale

To make your system efficient and scalable, it's important to minimize unnecessary calls to the GPT API using intelligent caching and conditional invocation strategies.

1. Avoid Reprocessing Duplicate Submissions

Many spam bots send the same message repeatedly. You can avoid redundant GPT calls by caching a hash of the message content.

```php
$fingerprint = hash('sha256', strtolower($formData['message']));
$cached = checkSpamCache($fingerprint); // Check Redis/MySQL/file cache

if ($cached) {
    $gptResult = $cached;
} else {
    $prompt = buildSpamPrompt($formData);
    $gptResult = callWithRetry($prompt);
    saveToSpamCache($fingerprint, $gptResult);
}
```

Keep cache entries for 7 to 30 days depending on your spam volume.
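`checkSpamCache()` and `saveToSpamCache()` are left abstract above. As a low-volume illustration only, here is a sketch of both helpers backed by a JSON file with a time-to-live. The file path, the 14-day TTL, and the whitespace normalization in the hypothetical `spamFingerprint()` are assumptions; a production system would more likely use Redis with per-key expiry.

```php
<?php
// Hypothetical file-backed implementations of the cache helpers used above.
const SPAM_CACHE_TTL = 14 * 86400; // assumption: 14 days, within the 7 to 30 day range

function spamFingerprint(string $message): string
{
    // Lower-case and collapse whitespace so trivial variations hash identically
    return hash('sha256', strtolower(trim(preg_replace('/\s+/', ' ', $message))));
}

function loadSpamCache(string $file): array
{
    if (!is_file($file)) {
        return [];
    }
    $data = json_decode((string) file_get_contents($file), true);
    return is_array($data) ? $data : [];
}

function checkSpamCache(string $fingerprint, ?string $file = null): ?string
{
    $file ??= sys_get_temp_dir() . '/spam_cache.json';
    $entry = loadSpamCache($file)[$fingerprint] ?? null;
    // Missing or expired entries count as a cache miss (expired ones are not pruned here)
    if ($entry === null || $entry['expires'] < time()) {
        return null;
    }
    return $entry['label'];
}

function saveToSpamCache(string $fingerprint, string $label, ?string $file = null): void
{
    $file ??= sys_get_temp_dir() . '/spam_cache.json';
    $cache = loadSpamCache($file);
    $cache[$fingerprint] = ['label' => $label, 'expires' => time() + SPAM_CACHE_TTL];
    // LOCK_EX prevents interleaved writes, but this is still not built for high concurrency
    file_put_contents($file, json_encode($cache), LOCK_EX);
}
```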

2. Skip GPT for Obvious Spam or Legitimate Inputs

If the heuristic score is clearly high or low, there's no need to call the LLM. Define pre-check logic:

```php
if ($heuristicScore >= 6) {
    $gptResult = 'SPAM'; // Bypass GPT
} elseif ($heuristicScore <= 1) {
    $gptResult = 'LEGIT'; // Bypass GPT
} else {
    $prompt = buildSpamPrompt($formData);
    $gptResult = callWithRetry($prompt);
}
```

This “threshold window” drastically reduces GPT API usage while maintaining confidence.
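To keep the bypass logic testable, you can wrap it in a function that receives the GPT call as a callable, in line with the earlier design goal of being able to skip or mock the GPT service. `resolveGptResult` and its default window bounds (which mirror the `>= 6` and `<= 1` checks above) are hypothetical.

```php
<?php
// Hypothetical wrapper around the threshold window above. $classify is any
// callable that takes the form data and returns 'SPAM', 'LEGIT', or 'SUSPICIOUS',
// e.g. fn(array $d) => callWithRetry(buildSpamPrompt($d)) in production,
// or a stub in tests.
function resolveGptResult(
    array $formData,
    int $heuristicScore,
    callable $classify,
    int $spamAt = 6,
    int $legitAt = 1
): string {
    if ($heuristicScore >= $spamAt) {
        return 'SPAM';  // clearly spam: skip the API call
    }
    if ($heuristicScore <= $legitAt) {
        return 'LEGIT'; // clearly fine: skip the API call
    }
    return $classify($formData); // ambiguous window: ask the model
}
```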

3. Cost Estimation Example

Assume you receive 1,000 submissions per day:

- 80% are obvious (skip GPT)

- 20% go to GPT (~200 calls)

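- Each call costs roughly $0.002 to $0.004 using gpt-3.5-turbo (the exact figure depends on prompt length and OpenAI's current per-token pricing)

Estimated monthly cost: $12 to $24 (200 calls × 30 days × $0.002 to $0.004 per call)

With caching on top of the threshold logic, this could come down by another 50 to 70%, depending on how often your spam repeats verbatim.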
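The arithmetic behind that estimate is simple enough to keep as a helper, so you can rerun it with your own traffic and per-call price. `estimateMonthlyGptCost` is a hypothetical name; the 30-day month and the per-call prices are the assumptions from the example above, not current OpenAI pricing.

```php
<?php
// Hypothetical helper: monthly spend = submissions/day x share sent to GPT
// x cost per call x days per month.
function estimateMonthlyGptCost(int $submissionsPerDay, float $gptShare, float $costPerCall, int $days = 30): float
{
    $callsPerDay = $submissionsPerDay * $gptShare;
    return $callsPerDay * $costPerCall * $days;
}

// Reproduces the example: 1,000 submissions/day, 20% sent to GPT
echo 'Low: $' . number_format(estimateMonthlyGptCost(1000, 0.20, 0.002), 2), PHP_EOL;  // Low: $12.00
echo 'High: $' . number_format(estimateMonthlyGptCost(1000, 0.20, 0.004), 2), PHP_EOL; // High: $24.00
```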

4. Use Local Caching Layers

- Redis: In-memory cache for high-throughput systems

- MySQL: If Redis is not available

- Filesystem: For low-volume apps, cache to JSON files or SQLite

5. Token Optimization

You are billed based on tokens in the prompt + completion. To reduce token usage:

- Limit training examples to 1 to 2

- Exclude unnecessary metadata

- Use compact field names if needed

- Keep max_tokens low (e.g., 10)

6. Optional: Model Selection

Use gpt-3.5-turbo by default. Only switch to gpt-4 for extremely ambiguous cases, or add it as a second-layer check.

Summary

- Use fingerprint caching to eliminate duplicate processing

- Skip GPT for clearly good/bad submissions

- Cache GPT results short-term to reduce cost

- Trim prompt size and keep max_tokens low to reduce token waste

In the next section, we'll look at securing this integration with API abuse protections and fallback logic to handle GPT outages.

VIII. Security, Abuse Prevention & Fail Safes

When you integrate external APIs like ChatGPT into your form backend, security and stability become critical. You must protect your system from:

- Exposing API keys

- Malicious overuse (both human and bot-driven)

- Downtime or failures in the OpenAI API

Let's break down practical safeguards to implement in your system.

1. Secure Your OpenAI API Key

- Never embed keys in frontend code

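- Store API keys in .env files or server-side config

- Use file permissions to restrict read access

- If deploying on cloud, store secrets using encrypted secrets managers

```php
// config.php: getenv() reads the process environment; a .env file must be
// loaded into it by a dotenv library or your web server configuration
define('OPENAI_API_KEY', getenv('OPENAI_API_KEY'));
```

2. Protect the Form Endpoint from Abuse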

Your form should not be an open gateway for spamming the GPT API. Enforce input throttling using one or more of the following:

- IP rate limits: e.g., 10 submissions/hour/IP

- Session lock: prevent multiple rapid-fire submissions in a row

- Honeypot or time-based trap fields: basic bot traps
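For the honeypot and time-based trap item in the list above, one option is a hidden honeypot field plus a server-signed render timestamp. Because the timestamp is signed with `hash_hmac()`, a bot cannot simply claim it spent 40 seconds on the form, which is a weakness of trusting a client-reported `timeToSubmit`. This is a sketch: the field names `website` and `form_ts`, the secret handling, and the 3-second minimum are assumptions, and a token can be replayed unless you also track used tokens.

```php
<?php
// Hypothetical honeypot + time-trap check. When rendering the form, put
// issueFormToken() in a hidden field 'form_ts' and add an empty, visually
// hidden input named 'website' that humans never fill in.
function issueFormToken(string $secret, ?int $now = null): string
{
    $ts = (string) ($now ?? time());
    return $ts . '.' . hash_hmac('sha256', $ts, $secret);
}

// Returns a reason string if the submission trips a trap, or null if it passes.
function checkBotTraps(array $post, string $secret, int $minSeconds = 3, ?int $now = null): ?string
{
    if (($post['website'] ?? '') !== '') {
        return 'honeypot_filled';
    }
    $parts = explode('.', (string) ($post['form_ts'] ?? ''), 2);
    if (count($parts) !== 2 || preg_match('/^\d+$/', $parts[0]) !== 1) {
        return 'missing_token';
    }
    [$ts, $sig] = $parts;
    // hash_equals() compares in constant time to avoid timing leaks
    if (!hash_equals(hash_hmac('sha256', $ts, $secret), $sig)) {
        return 'bad_signature';
    }
    if (($now ?? time()) - (int) $ts < $minSeconds) {
        return 'too_fast';
    }
    return null;
}
```

The elapsed seconds derived from a verified token are also a more trustworthy value for the TimeToSubmit field in the prompt than a number reported by the browser.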

Sample IP throttle in PHP (using Redis or DB):

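```php
$ip = $_SERVER['REMOTE_ADDR'];
$key = 'form_attempts:' . $ip;

// getRateLimitCounter() and incrementRateLimitCounter() are placeholders for
// your Redis or DB layer (e.g. Redis GET, then INCR + EXPIRE for a 1-hour window)
$attempts = getRateLimitCounter($key);

if ($attempts >= 10) {
    http_response_code(429);
    exit('Rate limit exceeded');
}

incrementRateLimitCounter($key, 3600); // count this attempt; window expires after 1 hour
```

3. GPT Call Budget Quota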

Set a soft limit per day for total GPT calls. If usage spikes, block further API calls temporarily and rely solely on heuristics or flag for manual review.

```php
if (getTodayGptCallCount() >= DAILY_GPT_LIMIT) {
    // Skip GPT, rely on fallback
    $gptResult = 'SUSPICIOUS';
}
```

4. Fallback Logic for GPT Outages

GPT API may be down, slow, or throw rate-limit errors. Always have a fallback plan:

- Heuristic-only mode: skip GPT and rely on rules

- Temporary allowlisting: allow certain IPs or domains

- Flag submissions for admin review: instead of hard-blocking

Example fallback handler:

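```php
try {
    $gptResult = callWithRetry($prompt);
} catch (Throwable $e) {
    // callWithRetry() already falls back after its last attempt; this catch is a
    // last line of defense for anything unexpected (e.g. a TypeError)
    logError("GPT failed: " . $e->getMessage());
    $gptResult = 'SUSPICIOUS'; // conservative fallback
}
```

5. Logging and Auditing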

Maintain a log of every GPT call and its classification. Log enough data for debugging, but avoid storing sensitive information (like full email content).

- Form hash or ID

- Timestamp

- Classification

- Response time

- Error message (if any)

```sql
CREATE TABLE spam_logs (
    id INT AUTO_INCREMENT PRIMARY KEY,
    form_hash VARCHAR(64),
    classification VARCHAR(20),
    gpt_latency_ms INT,
    status VARCHAR(20),
    error_message TEXT,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);
```

6. Review Dashboard Access Control

If you're building an admin UI for flagged/suspicious entries, protect it behind login and role-based access. Do not expose raw user content without authentication.

Summary

- Store API keys securely using environment variables

- Throttle users to prevent abuse of form + GPT backend

- Plan for OpenAI API failures with fallback logic

- Log and audit GPT classifications for traceability

- Build review tools behind proper access controls

In the next section, we'll explore logging and how to build an internal dashboard to review flagged submissions and improve the model's accuracy over time.

IX. Logging & Admin Dashboard Suggestions

Once your spam detection system is live, maintaining visibility is key. You'll want to track what the model is classifying, identify misclassifications, and improve your heuristics or prompt examples over time.

A lightweight logging and dashboard layer will allow you (or your clients) to review suspicious or blocked form entries without digging through raw logs or the database directly.

1. What to Log

Every form submission that touches the spam filter should be logged. Store the following fields:

- form_hash: unique hash of the submission (e.g., sha256 of message)

- classification: GPT result (LEGIT / SPAM / SUSPICIOUS)

- heuristic_score: numeric score (0 to X)

- final_decision: ACCEPT / FLAG / REJECT

- latency: GPT response time (ms)

- error: exception message if GPT failed

- created_at: timestamp of submission

```sql
CREATE TABLE spam_logs (
    id INT AUTO_INCREMENT PRIMARY KEY,
    form_hash VARCHAR(64),
    classification VARCHAR(20),
    heuristic_score INT,
    final_decision VARCHAR(20),
    gpt_latency_ms INT,
    error_message TEXT,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);
```

2. Optional: Store Redacted Form Fields

To comply with privacy regulations such as GDPR, avoid storing full user submissions. Store only hashes or redacted/partial data instead.

```php
$log = [
    'form_hash' => hash('sha256', $formData['message']),
    'classification' => $gptResult,
    'heuristic_score' => $score,
    'final_decision' => $decision,
    'gpt_latency_ms' => $latency,
    'error_message' => $error ?? null,
];
```

3. Admin Dashboard: Key Features

Build a simple internal interface (PHP or React) to inspect logged data. Focus on:

- List of recent flagged or rejected entries (filterable by date, type)

- View decision history: what score, what GPT said, what was the result

- Mark as override: allow admin to reclassify false positives

- Export logs: CSV or JSON for offline analysis

Sample dashboard table view:

| Date | Classification | Heuristic Score | Decision | Latency | View |
|---|---|---|---|---|---|
| 2025-07-04 | SPAM | 7 | REJECT | 984 ms | Details |
| 2025-07-04 | SUSPICIOUS | 3 | FLAG | 523 ms | Details |
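For the export feature in the list above, PHP's built-in `fputcsv()` handles quoting for you. A minimal sketch, assuming log rows are associative arrays keyed by the `spam_logs` column names; `exportLogsToCsv` is a hypothetical helper.

```php
<?php
// Hypothetical CSV export for the admin dashboard. $columns fixes the column
// order; missing values are written as empty fields.
function exportLogsToCsv(array $rows, array $columns): string
{
    $fh = fopen('php://temp', 'r+');
    // Pass separator, enclosure, and an empty escape explicitly (RFC 4180 style)
    fputcsv($fh, $columns, ',', '"', '');
    foreach ($rows as $row) {
        $line = [];
        foreach ($columns as $col) {
            $line[] = $row[$col] ?? '';
        }
        fputcsv($fh, $line, ',', '"', '');
    }
    rewind($fh);
    $csv = (string) stream_get_contents($fh);
    fclose($fh);
    return $csv;
}
```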

4. Review Feedback Loop (Optional)

If you have human reviewers or admin users reclassifying entries, feed those results back to:

- Refine your heuristic weights

- Add better few-shot examples in your GPT prompt

- Whitelist known IPs/domains

Over time, this review loop strengthens the system's precision and reduces false flags.

5. Access Control

- Restrict access to log views and dashboard via role-based login

- Do not expose raw GPT prompts or user messages publicly

- Use encrypted connection (HTTPS + secure sessions)

Summary

- Log every GPT classification and heuristic score with metadata

- Build an internal dashboard to review, audit, and override decisions

- Allow feedback to improve your system continuously

- Protect access to logs with proper authentication

In the final section, we'll summarize the full flow and highlight some production readiness tips for deploying this in a live environment.

X. Deployment Checklist & Final Thoughts

With the full pipeline built—from input preprocessing to GPT classification and hybrid scoring—you're ready to deploy. But before going live, it's essential to audit your setup for speed, reliability, and security.

1. Deployment Checklist

- ✅ Environment separation: Use test and production API keys separately

- ✅ API key security: Stored in server-side config, not exposed to frontend

- ✅ Rate limiting: Form endpoint protected by IP/session throttles

- ✅ Caching layer: Redis, SQLite or MySQL cache to avoid duplicate GPT calls

- ✅ Error handling: Fallback if GPT API fails (heuristics + logs)

- ✅ Logging: All GPT classifications and decisions are stored

- ✅ Admin tools: Internal dashboard to audit and reclassify if needed

- ✅ Output constraint: Model instructed to reply with single-word result

- ✅ Prompt optimization: Short, deterministic prompts with max 2 examples

- ✅ Cost control: GPT usage thresholds in place, skip for clear cases

2. Optional Enhancements

- Train a small ML model using reviewed data for long-term cost savings

- Use OpenAI's logprobs (if available) to score the confidence of GPT decisions

- Add support for multiple languages by translating prompts before classification

- Integrate the OpenAI moderation endpoint as a secondary filter
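For the logprobs idea above, the Chat Completions API can return per-token log-probabilities when the request includes `'logprobs' => true`; at the time of writing they appear under `choices[0].logprobs.content`, one entry per token with a `logprob` field. Treat that response shape as an assumption to verify against the current API reference. This sketch turns them into a single confidence value for the reply; `replyConfidence` is a hypothetical helper.

```php
<?php
// Hypothetical helper: given a decoded Chat Completions response requested
// with 'logprobs' => true, return the model's probability for its whole reply.
// A label such as "SUSPICIOUS" may span several tokens, so log-probabilities
// are summed before exponentiating (joint probability of the token sequence).
function replyConfidence(array $data): ?float
{
    $tokens = $data['choices'][0]['logprobs']['content'] ?? null;
    if (!is_array($tokens) || $tokens === []) {
        return null; // logprobs not requested or not returned
    }
    $sum = 0.0;
    foreach ($tokens as $token) {
        $sum += (float) ($token['logprob'] ?? 0.0);
    }
    return exp($sum);
}
```

You could then, for example, downgrade a SPAM verdict to SUSPICIOUS when confidence falls below a threshold you calibrate on reviewed submissions.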

3. When Not to Use GPT

GPT is powerful but not always necessary. Avoid using it for:

- Ultra-high-volume spam (e.g., comment sections or email lists)

- Real-time UI validations (due to latency)

- Use cases where deterministic filtering is legally required

Final Thoughts

Language models like ChatGPT offer a new frontier for intelligent spam detection. With a well-designed prompt and a hybrid scoring system, they can drastically reduce junk submissions while preserving genuine leads. When deployed with care, caching, and fallback protections, this approach is not only technically sound but also production-ready.

Surface-level form spam is largely a solved problem, but GPT gives us deeper insight into intent, tone, and semantic trickery that regular filters can't decode. This article has shown how to treat ChatGPT not as a magic box but as a programmable engine, one that, when integrated carefully, can act as a natural language firewall for your forms.

I've implemented a slightly customized version of this system in my SaaS product, FormApe.com, a free online form builder, to help protect my customers from spam submissions.

Thanks for reading. If you enjoyed this deep dive, consider exploring:

- How to fine-tune GPT for domain-specific content moderation

- Prompt chaining for smarter lead qualification

- Serverless GPT-based microservices in PHP or Node.js